Sequences

| Tags | MATH 115 |
|---|---|

PROOF TRICKS 🔨

- Proof by number line visualizations (good for intuition). Circle an epsilon radius around the point and see if you can make any deductions.

- When you are dealing with things of the form $|a + b|$, the triangle inequality $|a + b| \le |a| + |b|$ should be in your toolkit

- Never use infinite behavior for limits, i.e. you can’t argue about what happens “at $n = \infty$”, as that has no precise meaning. Always go back to the original definition.

- If $|x - a| < \varepsilon$, we know that $x < a + \varepsilon$ and $x > a - \varepsilon$. You get two bounds from one.

- Conversely, to show an inequality like $|x - a| < \varepsilon$, we need to show two bounds: $x < a + \varepsilon$ and $x > a - \varepsilon$.

- To universalize, or to specialize?

- If you want to show a limit exists, you need to universalize an epsilon

- If you want to show that a certain property holds given that a limit exists, you might want to put your thumb down on an epsilon

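- Specifically, if you care about a certain property of every $s_n$, you can split the indices at any $N$: the fixed epsilon controls the tail $n > N$, and the finitely many terms with $n \le N$ are handled directly.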

- If you’re dealing with bounds like $n > N_1$ and $n > N_2$, it’s often easy to let $N = \max(N_1, N_2)$ so that both are satisfied

- Redefining $\varepsilon$. Sometimes, you start with a limit definition and end with another limit definition. Therefore, it might be critical that you use a different epsilon for the first limit definition, like $\varepsilon/2$. This is legal because the definition holds for every positive number, so you can substitute $\varepsilon/2$ for your $\varepsilon$. It is helpful because later you may want to combine several bounds into a single $\varepsilon$ (e.g. $\varepsilon/2 + \varepsilon/2 = \varepsilon$).

- “reverse distribution”

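- The delta / 2 trick. This is particularly useful if you have some number strictly above an infimum (or below a supremum). As an example, take $a > m$ where $m$ is the greatest lower bound of a set $S$, and let $\delta = a - m > 0$. You say that $m + \delta/2 < a$, and that $m + \delta/2$ is no longer a lower bound by definition of $m$, which means that there must exist another element $x \in S$ with $m \le x < m + \delta/2$, i.e. between $m$ and $a$.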

- we use delta / 2 to avoid an edge case.

- split up into an infinite and finite case

Roadmap to convergence 🔨

- If the sequence is bounded and monotonic, then it converges

- Implicitly, we also know that it converges to the supremum of its values (if increasing) or the infimum (if decreasing)

- If the sequence is monotonic but not known to be bounded, then it either converges or diverges to $+\infty$ or $-\infty$

- If the sequence is not monotonic, then we need to use $\liminf s_n$ and $\limsup s_n$ to judge whether the limit exists or not.

- However, we can also check the Cauchy property, because we’ve shown that a sequence is Cauchy if and only if it converges

- This is particularly useful if you have absolutely no idea what the limit could be, like a recursively defined sequence where you only get a bound on the differences between terms

Sequence Definitions 🐧

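A sequence is just a function whose domain is a discrete set of integers $\{n \in \mathbb{Z} : n \ge m\}$, where usually $m = 0$ or $m = 1$. The value at $n$ is typically denoted $s_n$, and you might write the sequence with its lower and upper index bounds as $(s_n)_{n=m}^{\infty}$, or just $(s_n)_{n \ge m}$. Or, if the domain is well-established, we just use $(s_n)$.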

The sequence $(s_n)$ can have repeated values, but the set of values $\{s_n : n \in \mathbb{N}\}$ contains only the distinct values. Sequences are denoted by $(s_n)$ and sets are denoted by $\{s_n\}$.

The limit of a sequence $(s_n)$ is the number that $s_n$ approaches for large values of $n$.

We say that a sequence $(s_n)$ is bounded if there exists some $M$ such that $|s_n| \le M$ for all $n$.

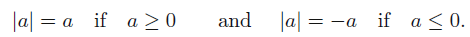

Absolute value definition 🐧

(this helps for certain proofs with limits)

We define the following from an absolute value

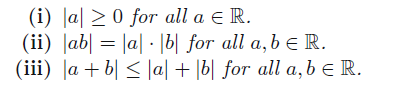

Absolute value properties 🚀

These have three properties

Proof sketch

- If , then from our ordered field axioms, we are done. If , then from our field axioms, we are also done

- Four cases. Just enumerate them

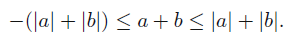

- This one is actually pretty cool. We know that and the same for . Therefore, we can add them to each other, yielding

We conclude that and that , and that’s why .

We actually call (III) the triangle inequality and it is very useful.

Whenever you get something like $|a - b| < c$, it means that $a$ is within radius $c$ of $b$, so you get two inequalities from this: $b - c < a < b + c$.

Limits

Formal definition 🐧

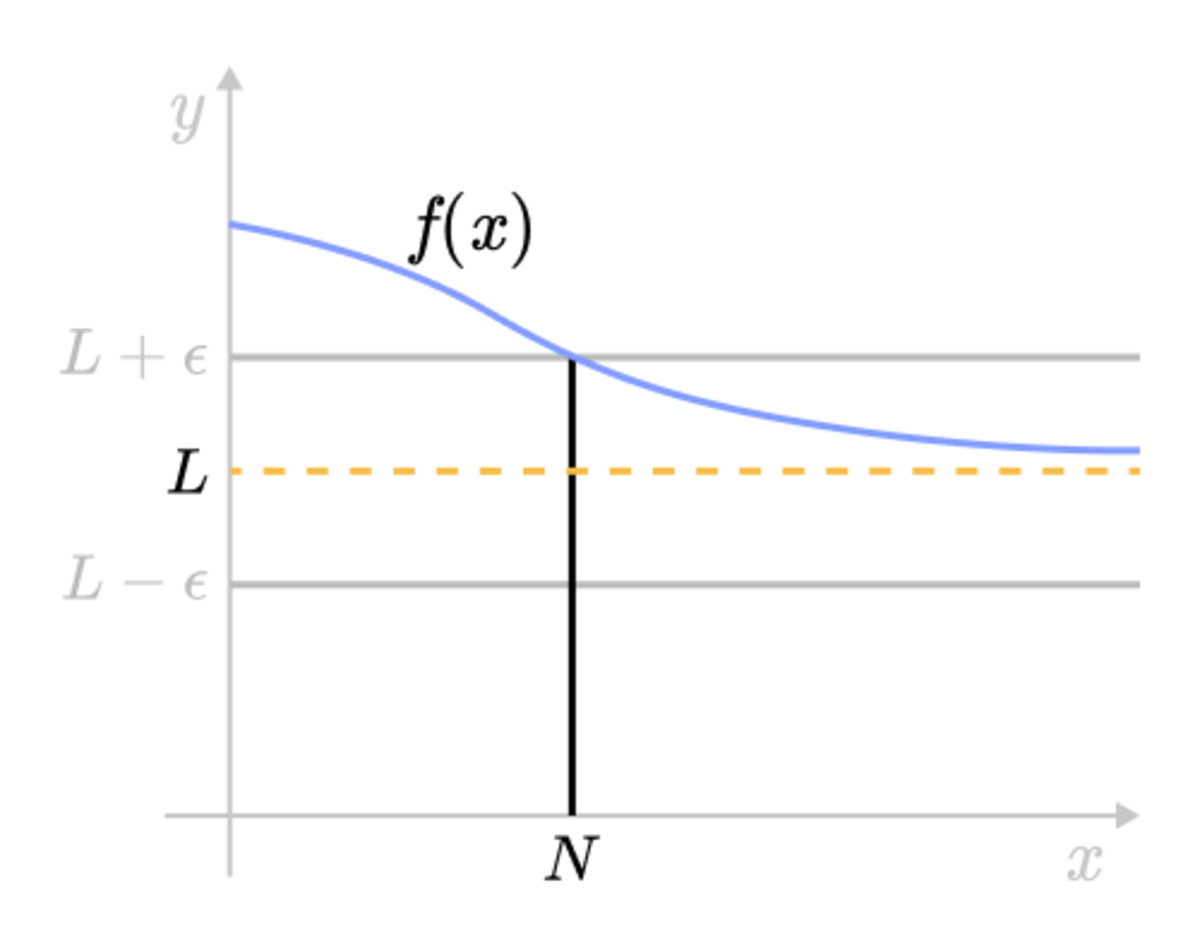

A sequence $(s_n)$ converges to $s \in \mathbb{R}$ if for every $\varepsilon > 0$ there exists some $N$ such that $n > N$ implies $|s_n - s| < \varepsilon$.

Conversely, we say that a sequence diverges if no such number $s$ exists (for how to show this formally, see “Disproving limits” below). It doesn’t have to diverge to infinity; it can just oscillate, like $s_n = (-1)^n$.

In English, we say that for any positive radius $\varepsilon$, we can find an index $N$ large enough that every term after $s_N$ lies within that radius of $s$. This is the fundamental definition of limits, and we use it to prove the limit properties that we use in calculus class. We also call these delta-epsilon proofs.

Here’s a good diagram that can help

Limits to infinity 🐧

We say that $\lim s_n = +\infty$ using the following strict definition: for all $M > 0$, there exists some $N$ such that $n > N$ implies that $s_n > M$.

In English, it means that no matter how large $M$ is, the entire tail of the sequence past some index lies above $M$.

For negative infinity, we just say that for all $M < 0$, there exists some $N$ such that $n > N$ implies $s_n < M$.
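As a small numeric sanity check of this definition, here is a sketch on an assumed example, $s_n = \sqrt{n}$ (not one from the notes). Working backwards from $\sqrt{n} > M$ gives $n > M^2$, so $N = \lceil M^2 \rceil$ works. The helper names `n_for_height` and `tail_exceeds` are hypothetical, and checking a finite window of the tail only illustrates the definition; it does not prove it.

```python
import math

def n_for_height(M):
    """For s_n = sqrt(n): n > M**2 implies sqrt(n) > M, so N = ceil(M**2) works."""
    return math.ceil(M * M)

def tail_exceeds(M, window=1000):
    """Check s_n > M on the first `window` indices n > N (a finite sample, not a proof)."""
    N = n_for_height(M)
    return all(math.sqrt(n) > M for n in range(N + 1, N + 1 + window))

for M in (1, 10, 37.5):
    print(M, n_for_height(M), tail_exceeds(M))
```

Note that $N$ depends only on $M$, exactly like $N$ depends only on $\varepsilon$ in the finite-limit definition.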

Uniqueness of limits 🚀

If $\lim s_n = s$ and $\lim s_n = t$, then $s = t$.

Proof (squeeze)

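If we assume that both limits exist, then for every $\varepsilon > 0$ we have some $N_1$ such that $|s_n - s| < \varepsilon/2$ for $n > N_1$, and we also have some $N_2$ such that $|s_n - t| < \varepsilon/2$ for $n > N_2$. These $N$’s might be different, though.

By the triangle inequality, for any $n > \max(N_1, N_2)$ we have

$$|s - t| \le |s - s_n| + |s_n - t| < \frac{\varepsilon}{2} + \frac{\varepsilon}{2} = \varepsilon.$$

Therefore, $|s - t| < \varepsilon$ for all $\varepsilon > 0$, so it must be that $s = t$.

Note that we used the triangle inequality because we wanted to squeeze $|s - t|$ below every positive number.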

Alternative proof (contradiction by choosing epsilon)

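We try a contradiction. Suppose that $s \ne t$, and set $\varepsilon = \frac{|s - t|}{2} > 0$. By the limit definition, if we let $n > \max(N_1, N_2)$, we have $|s_n - s| < \varepsilon$ and $|s_n - t| < \varepsilon$. We also know that

$$|s - t| \le |s - s_n| + |s_n - t| < 2\varepsilon.$$

However, we’ve established that the left-hand side is $|s - t| = 2\varepsilon$, and it’s not true that $2\varepsilon < 2\varepsilon$, so we get a contradiction.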

Tricks about proofs 🔨

When we prove that $\lim s_n = s$, we let $\varepsilon > 0$, and we want to end up with some definition of $N$ in terms of $\varepsilon$ such that $n > N$ implies $|s_n - s| < \varepsilon$.

To do this, we often work backwards. We start with $|s_n - s| < \varepsilon$. Because $s_n$ is a function of $n$, we can often move things around in the inequality until we have an inequality of the form $n > f(\varepsilon)$, and then we let $N = f(\varepsilon)$ and show the forward direction.

Example proof

Show that . Proof: let . If , then , which means that , implying that which is the claim we wanted to show. See how we went forward in the definition, but to get the original , we went backwards.
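To see the “work backwards, then go forward” pattern numerically, here is a minimal sketch for one illustrative limit, $\lim 1/n^2 = 0$ (an assumed example). Working backwards, $1/n^2 < \varepsilon \iff n > 1/\sqrt{\varepsilon}$, so we take $N = \lfloor 1/\sqrt{\varepsilon} \rfloor$; the forward check then samples indices past $N$. The function names are hypothetical, and floating-point rounding can matter when $1/\sqrt{\varepsilon}$ is exactly an integer, so treat this as a sanity check rather than a proof.

```python
import math

def n_for_epsilon(eps):
    """Backward step for s_n = 1/n**2: 1/n**2 < eps  <=>  n > 1/sqrt(eps)."""
    return math.floor(1 / math.sqrt(eps))

def forward_check(eps, window=1000):
    """Forward step: every sampled n > N satisfies |1/n**2 - 0| < eps."""
    N = n_for_epsilon(eps)
    return all(abs(1 / n**2 - 0) < eps for n in range(N + 1, N + 1 + window))

for eps in (0.5, 0.1, 0.01):
    print(eps, n_for_epsilon(eps), forward_check(eps))
```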

For harder functions, you have a few tools in the toolkit

- You don’t have to find the smallest possible $N$. So, you can upper bound $|s_n - s|$ by a simpler function $g(n)$, and show that $g(n) < \varepsilon$ by finding an $N$ (pro-tip: you often end up with two bounds on $n$: one where the upper bound $|s_n - s| \le g(n)$ holds, and one where $g(n) < \varepsilon$ holds. The $N$ must be the maximum of those values). [KEY FACT: you’re bounding $|s_n - s|$, NOT $s_n$.]

- For divergence to $+\infty$, consider lower bounds instead, which are the exact opposite: bound $s_n$ below by a simpler function and make that exceed $M$

- If a limit exists for , it also exists for .
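As an illustration of the upper-bounding trick, here is a worked example on an assumed sequence, $s_n = \frac{n + 1}{n^2}$ with candidate limit $0$. Instead of solving $\frac{n+1}{n^2} < \varepsilon$ exactly, bound it by something simpler:

$$\left| \frac{n+1}{n^2} - 0 \right| = \frac{n+1}{n^2} \le \frac{n + n}{n^2} = \frac{2}{n} \quad (n \ge 1), \qquad \frac{2}{n} < \varepsilon \iff n > \frac{2}{\varepsilon}.$$

So $N = \frac{2}{\varepsilon}$ works: for $n > N$, we get $|s_n - 0| \le \frac{2}{n} < \varepsilon$. Here the simplifying bound holds for every $n \ge 1$, so only one condition appears; if it only held for $n > N_1$, you would take $N = \max(N_1, \frac{2}{\varepsilon})$.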

Disproving limits (proving divergence) 🔨

You can either

- Assume that it converges and derive a contradiction

- Show that for every candidate limit $s$ there is some $\varepsilon > 0$ such that for all $N$, there exists some $n > N$ with $|s_n - s| \ge \varepsilon$.

- show that two subsequences yield two different limits (more on this later)
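The second strategy can be made concrete for the oscillating sequence $s_n = (-1)^n$ (used here as an assumed example). For any candidate limit $s$, one of $|1 - s|$ and $|-1 - s|$ is at least $1$, so $\varepsilon = 1$ works, and among any two consecutive indices past $N$ one is even and one is odd. The helper `bad_index` is hypothetical; it just exhibits the witness $n$ that the negated definition asks for.

```python
def s(n):
    """The oscillating sequence s_n = (-1)^n."""
    return (-1) ** n

def bad_index(candidate, N, eps=1.0):
    """Return some n > N with |s_n - candidate| >= eps.

    With eps = 1 this always succeeds: one of N+1, N+2 is even (s_n = 1) and
    one is odd (s_n = -1), and |1 - c| + |-1 - c| >= 2 for every real c.
    """
    for n in (N + 1, N + 2):
        if abs(s(n) - candidate) >= eps:
            return n
    raise ValueError("no witness among the next two indices")

print([bad_index(c, 10) for c in (-1, 0, 0.5, 1, 3)])
```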

Limit Theorems

List of proven theorems 🚀

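- If $s_n \le t_n$ for all $n$, $\lim s_n = s$, and $\lim t_n = t$, then $s \le t$

Proof (start with contradiction, and use epsilon to show contradiction)

Suppose that $s > t$. Then, $s - t > 0$, and we can set $\varepsilon = \frac{s - t}{2}$. Now, we know that $|s_n - s| < \varepsilon$ for $n > N_1$, for some $N_1$, and $|t_n - t| < \varepsilon$ for $n > N_2$, for some $N_2$. Let $n > N = \max(N_1, N_2)$. From the triangle inequality (and $s_n - t_n \le 0$), we get

$$s - t = (s - s_n) + (s_n - t_n) + (t_n - t) \le |s - s_n| + |t_n - t| < 2\varepsilon.$$

But we just defined $\varepsilon = \frac{s - t}{2}$, so we get a clear contradiction: $s - t < s - t$. Where does the contradiction come from? Assuming that $s > t$!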

- Convergent sequences are bounded

Proof (Pick epsilon and show boundedness)

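Let $s = \lim s_n$. We are dealing with $\varepsilon$, and a useful trick is to put a hand on $\varepsilon$ (fix one value), because $\varepsilon$ is not the thing we are trying to show anything about. We are trying to show a bound $|s_n| \le M$ across all $n$.

Let $\varepsilon = 1$, so we have $|s_n - s| < 1$ for all $n > N$, for some $N$. This means that $s_n < s + 1$ and $s_n > s - 1$. Automatically, we get upper and lower bounds for the terms with $n > N$. We still have the terms $s_1, \dots, s_N$, but this is a finite set, so it also has upper and lower bounds. Select the upper bound as either $s + 1$ or $\max\{s_1, \dots, s_N\}$, depending on which one is larger. Similarly for the lower bound, using $s - 1$ and $\min\{s_1, \dots, s_N\}$.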

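- $\lim (k s_n) = k \lim s_n$ for any constant $k \in \mathbb{R}$.

Proof (direct proof through limit definitions)

Let $s$ be the limit. If $k = 0$ there is nothing to show, so assume $k \ne 0$. In this case, we have $|s_n - s| < \frac{\varepsilon}{|k|}$ for all $n > N$, as we can just make a new epsilon $\frac{\varepsilon}{|k|}$. So, we can multiply everything through by $|k|$ to get $|k s_n - k s| < \varepsilon$, proving that $ks$ is the limit of $(k s_n)$.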

-

Proof (direct proof through limit definitions)

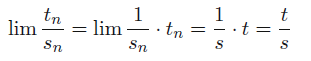

Similar technique. We know that , and for all (where N_s, N_t were the original bounds).

We add them together and use the triangle inequality

and so we showed that is the limit.

-

- Corollary .

Proof (use definition of limits and boundedness)

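We want to show that $\lim s_n t_n = st$, where $s = \lim s_n$ and $t = \lim t_n$. We start by using a trick (add and subtract $s_n t$):

$$|s_n t_n - st| = |s_n (t_n - t) + t (s_n - s)|,$$

which by the triangle inequality yields

$$|s_n t_n - st| \le |s_n| \, |t_n - t| + |t| \, |s_n - s|.$$

We get a hint of how it will play out. We know that $|s_n| \le M$ for all $n$, for some $M > 0$, because convergent sequences are bounded. We also know that $|t_n - t| < \frac{\varepsilon}{2M}$ for $n > N_1$ (because you can just redefine what $\varepsilon$ is). Similarly, we know that $|s_n - s| < \frac{\varepsilon}{2(|t| + 1)}$ for $n > N_2$ (the $+1$ avoids dividing by zero when $t = 0$), and we can set $N = \max(N_1, N_2)$. From this, we know that for $n > N$,

$$|s_n| \, |t_n - t| < M \cdot \frac{\varepsilon}{2M} = \frac{\varepsilon}{2}$$

and

$$|t| \, |s_n - s| \le |t| \cdot \frac{\varepsilon}{2(|t| + 1)} < \frac{\varepsilon}{2},$$

which means that $|s_n t_n - st| < \varepsilon$, as desired.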

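- $\lim \frac{1}{s_n} = \frac{1}{s}$ if $\lim s_n = s \ne 0$ and $s_n \ne 0$ for all $n$

Proof (use boundedness)

We establish that

$$\left| \frac{1}{s_n} - \frac{1}{s} \right| = \frac{|s - s_n|}{|s_n| \, |s|},$$

where $|s|$ and the lower bound $m$ below are two constants that can be part of the epsilon. Now, we know that $|s_n - s| < m |s| \varepsilon$ for all $n > N$, for some $N$.

And we also know that $|s_n|$ is bounded away from zero: there is some $m > 0$ with $|s_n| \ge m$ for all $n$ (since $s_n \to s \ne 0$, we have $|s_n| > \frac{|s|}{2}$ for large $n$, and the finitely many remaining terms are nonzero). So we know that

$$\frac{1}{|s_n| \, |s|} \le \frac{1}{m |s|},$$

and using the original definition, we get

$$\left| \frac{1}{s_n} - \frac{1}{s} \right| < \frac{m |s| \varepsilon}{m |s|} = \varepsilon,$$

and we are done.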

-

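- If $a_n \le s_n \le b_n$ for all $n$ and $\lim a_n = \lim b_n = s$, then $\lim s_n = s$

Proof

If we assume that $\lim s_n$ exists, then $a_n \le s_n$ implies that $s = \lim a_n \le \lim s_n$ (see theorem (1)). Repeat this for the other side, and you get $\lim s_n \le \lim b_n = s$, and so $s \le \lim s_n \le s$, which forces us to conclude that $\lim s_n = s$. To see that $\lim s_n$ exists in the first place, let $\varepsilon > 0$ and pick $N$ so that $|a_n - s| < \varepsilon$ and $|b_n - s| < \varepsilon$ for $n > N$; then $s - \varepsilon < a_n \le s_n \le b_n < s + \varepsilon$, i.e. $|s_n - s| < \varepsilon$.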

Proven limit corollaries 🚀

-

- if

-

-

Infinite limit theorems 🚀

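- If $\lim s_n = +\infty$ and $\lim t_n > 0$, then $\lim s_n t_n = +\infty$.

Proof

Because $s_n$ diverges to $+\infty$, we know that for all $M > 0$, there exists some $N_1$ such that $n > N_1$ implies that $s_n > M$.

If we select a real number $m$ with $0 < m < \lim t_n$, then we know that $t_n > m$ for all $n > N_2$, for some $N_2$.

Therefore, given $M > 0$, apply the first fact to $\frac{M}{m}$: for all $n > \max(N_1, N_2)$, we have $s_n t_n > \frac{M}{m} \cdot m = M$, as desired.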

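- $\lim s_n = +\infty$ if and only if $\lim \frac{1}{s_n} = 0$ (assuming that $s_n > 0$ for all $n$). Without that assumption, the backward direction is NOT true: if you know that $\lim \frac{1}{s_n} = 0$, then you don’t know what $\lim s_n$ is. Take $s_n = (-1)^n n$, for example.

Proof

We show both directions.

Forward direction: let $\varepsilon > 0$ and define $M = \frac{1}{\varepsilon}$. This is legal, because $M$ still ranges over all of $(0, \infty)$. Therefore, there exists some $N$ such that $s_n > M$ for all $n > N$. Therefore, we have $\frac{1}{s_n} < \frac{1}{M} = \varepsilon$. Because $s_n > 0$, we have

$$\left| \frac{1}{s_n} - 0 \right| = \frac{1}{s_n} < \varepsilon \quad \text{for all } n > N,$$

which proves the forward direction.

Backward direction. Use a slightly inverted way of thinking. Let $M > 0$ and let $\varepsilon = \frac{1}{M}$. By definition, there exists some $N$ such that $\left| \frac{1}{s_n} - 0 \right| < \varepsilon$ for all $n > N$.

Because $s_n > 0$, we have $\frac{1}{s_n} < \frac{1}{M}$, which means that $s_n > M$ for all $n > N$, and so we are done.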

Computing vs proving limits 🔨

If you want to show that $\lim s_n = s$ for a given $s$, then you whip out your formal definitions, and you might do some lower bounding, upper bounding, etc.

When they say “compute the limit using the formal definition”, then propose a limit and prove it using the definition.

If you want to compute a limit, you need to work from the limit theorems above

Tricks for computing limits

- Fractions are your friend. If you don’t have a fraction, make one! A common trick is to

Monotone Sequences

A sequence $(s_n)$ monotonically increases if $s_n \le s_{n+1}$ for all $n$ (and monotonically decreases if $s_n \ge s_{n+1}$ for all $n$). We are talking about monotone sequences because they can help us show convergence even if we don’t know the exact limit.

Bounded Monotone Theorem ⭐

Theorem (1): if a sequence is monotonically increasing and has an upper bound, then it converges.

Proof

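Let $(s_n)$ be increasing and bounded above, and let $u = \sup\{s_n : n \in \mathbb{N}\}$, which exists by the completeness axiom. We want to show that $\lim s_n = u$.

Well, let $\varepsilon > 0$. We invoke the supremum definition and say that $u - \varepsilon$ can’t be an upper bound, so there must exist some $N$ such that $s_N > u - \varepsilon$. Now, we also know that $s_n \ge s_N$ for all $n > N$ (the sequence is increasing), so we have $s_n > u - \varepsilon$. Because $s_n \le u$ (supremum definition), we have $u - \varepsilon < s_n \le u$, so $|s_n - u| < \varepsilon$ for all $n > N$, which is the limit definition.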

Corollary: the limit of a bounded, monotonically increasing sequence is $\sup\{s_n : n \in \mathbb{N}\}$. We proposed this value up front and proved that it is the limit in the proof above, which also proved Theorem (1).

Sub-corollary: if a sequence is monotonically increasing and convergent, then $s_n \le \lim s_n$ for all $n$. (This comes from the definition of supremum.)

Theorem (2): if a sequence is monotonically decreasing and has a lower bound, then it converges. The proof uses exactly the same approach as above, and the corollary is that the limit of a bounded, monotonically decreasing sequence is $\inf\{s_n : n \in \mathbb{N}\}$.

Using this theorem 🔨

Sometimes, you have sequences that are defined recursively, and it’s very nasty to compute the actual limit. However, if you can show that the sequence is monotonically increasing and has an upper bound (or monotonically decreasing with a lower bound), you can show that it indeed has a limit.

More specifically, you need to show that $s_n \le s_{n+1}$ for all $n$ (or $s_n \ge s_{n+1}$ for all $n$), which means that it’s a monotone sequence. You might proceed inductively: assume that $s_{n-1} \le s_n$, and then show that $s_n \le s_{n+1}$. If you work backwards from the recursion, you will usually reduce this to some fact about $s_{n-1}$ and $s_n$ which is exactly the inductive hypothesis. Often, you need to provide a lower bound if you want to show that the sequence is decreasing (not just to use the theorem but also to show that it is monotonic). Conversely, you often need to provide an upper bound if you want to show that the sequence is increasing. These are just vague tips which can come in handy.

Just remember…you need to prove the base cases too!
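Here is a numeric sketch of this workflow for an assumed example, $s_1 = 1$, $s_{n+1} = \sqrt{2 + s_n}$. By induction one can show $0 < s_n < 2$ (if $s_n < 2$ then $s_{n+1} < \sqrt{4} = 2$), and then $s_{n+1}^2 - s_n^2 = 2 + s_n - s_n^2 = (2 - s_n)(1 + s_n) > 0$ gives $s_n < s_{n+1}$. The theorem then gives convergence, and passing to the limit in the recursion (using continuity of the square root) forces $L = \sqrt{2 + L}$, so $L = 2$. The code only checks the first terms; the function name is hypothetical.

```python
import math

def recursive_terms(count, s1=1.0):
    """First `count` terms of s_1 = s1, s_{n+1} = sqrt(2 + s_n)."""
    terms = [s1]
    while len(terms) < count:
        terms.append(math.sqrt(2 + terms[-1]))
    return terms

terms = recursive_terms(20)
increasing = all(a <= b for a, b in zip(terms, terms[1:]))  # monotone check
bounded = all(t < 2 for t in terms)                          # upper bound check
print(increasing, bounded, terms[-1])
```

The numbers can only suggest the bound and the direction of monotonicity; the induction is what proves them.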

Application: Cauchy Cantor / Nested Interval Theorem 🧸

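This theorem states that if $[a_1, b_1] \supseteq [a_2, b_2] \supseteq [a_3, b_3] \supseteq \cdots$ is a sequence of nested closed intervals, then there exists at least one $x$ such that

$$x \in \bigcap_{n=1}^{\infty} [a_n, b_n],$$

where $a_1 \le a_2 \le \cdots \le a_n \le b_n \le \cdots \le b_2 \le b_1$. Graphically, it means that if we apply tighter and tighter bounds, the intersection never becomes empty: we end up with a non-empty closed interval (possibly a single point).

Proof (uses monotonic sequences and inf/sup relationship)

By the definition of nested intervals, we know that $a_n \le a_{n+1} \le b_{n+1} \le b_n$ for every $n$. So $(a_n)$ is increasing and bounded above by $b_1$, and $(b_n)$ is decreasing and bounded below by $a_1$. By the Bounded Monotone Theorem, $a = \lim a_n = \sup_n a_n$ and $b = \lim b_n = \inf_n b_n$ both exist, and $a \le b$ because $a_n \le b_n$ for all $n$.

To prove this, we just want to show that $\bigcap_{n=1}^{\infty} [a_n, b_n] = [a, b]$, set-wise. This is because we know that $[a, b]$ is non-empty (as $a \le b$), and therefore the original intersection is non-empty.

We need to show two directions. Direction 1: $\bigcap_{n=1}^{\infty} [a_n, b_n] \subseteq [a, b]$.

For this direction, we use a proof by contradiction because there are a ton of restrictions to deal with in the forward direction. So let’s suppose that $x \in \bigcap_{n=1}^{\infty} [a_n, b_n]$ but $x \notin [a, b]$.

Case 1: $x < a$. Intuitively, this is a contradiction because you can pick some $a_n$ such that $x < a_n$, which means that $x$ wouldn’t satisfy the bounds $a_n \le x \le b_n$. But let’s rigorize this. Because we’re using limits and we want to show something about the limit property, we try to pick an epsilon. Let $\varepsilon = a - x > 0$. Therefore, there must exist some $N$ such that for all $n > N$, we have $|a_n - a| < \varepsilon$, so $a_n > a - \varepsilon = x$. And graphically, this means that there exists this $a_n > x$, which yields a contradiction.

Case 2 ($x > b$) is done in a similar way.

Direction 2: $[a, b] \subseteq \bigcap_{n=1}^{\infty} [a_n, b_n]$.

This direction is actually pretty simple. Let $x \in [a, b]$. We know that $x \ge a = \sup_n a_n$, which means that $x \ge a_n$ for all $n$ (here we use that $(a_n)$ is monotone, so its limit is its supremum). Similarly, we know that $x \le b = \inf_n b_n$, which means that $x \le b_n$ for all $n$ because $(b_n)$ is monotone.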
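A standard way nested intervals arise in practice is bisection. As a sketch (the target $\sqrt{2}$ and the function name are assumptions for illustration), we halve $[1, 2]$ repeatedly, always keeping the half that still brackets $\sqrt{2}$. The left endpoints increase, the right endpoints decrease, and the widths go to $0$, so here $a = b = \sqrt{2}$ is the single point in the intersection. Exact `Fraction` arithmetic keeps the comparisons free of rounding error.

```python
from fractions import Fraction

def nested_intervals_sqrt2(steps):
    """Bisection intervals [a_n, b_n] with a_n**2 <= 2 < b_n**2, each inside the last."""
    a, b = Fraction(1), Fraction(2)
    intervals = [(a, b)]
    for _ in range(steps):
        mid = (a + b) / 2
        if mid * mid <= 2:
            a = mid  # sqrt(2) lies in [mid, b]
        else:
            b = mid  # sqrt(2) lies in [a, mid]
        intervals.append((a, b))
    return intervals

last_a, last_b = nested_intervals_sqrt2(20)[-1]
print(float(last_a), float(last_b), last_b - last_a)
```

Each step halves the width, so after $k$ steps the interval has width $2^{-k}$.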

Subsequences

Definition 🐧

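A subsequence of $(s_n)$ is defined as $(s_{n_k})_{k \in \mathbb{N}}$, where $n_1 < n_2 < n_3 < \cdots$ is a strictly increasing sequence of natural numbers which are used to index the original sequence $(s_n)$. In other words,

$$(s_{n_k})_{k \in \mathbb{N}} = (s_{n_1}, s_{n_2}, s_{n_3}, \dots),$$

which we can understand as selecting a bunch of $s_n$’s taken in order. All subsequences are infinite.

We can also understand it as a function composition. We can see the sequence as a function $s : \mathbb{N} \to \mathbb{R}$ with $s(n) = s_n$, and our selection as a strictly increasing function $\sigma : \mathbb{N} \to \mathbb{N}$ with $\sigma(k) = n_k$. The subsequence is $s \circ \sigma$.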
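The function-composition view translates directly into code. In this sketch (names hypothetical), a sequence is a Python function of $n$, the index selection $\sigma(k) = n_k$ is a strictly increasing function, and the subsequence is their composition. Selecting the even or odd indices of $(-1)^n$ gives two constant subsequences.

```python
from typing import Callable

Seq = Callable[[int], float]

def subsequence(s: Seq, sigma: Callable[[int], int]) -> Seq:
    """Return k -> s(sigma(k)); sigma must be strictly increasing for a subsequence."""
    return lambda k: s(sigma(k))

s = lambda n: (-1) ** n                      # the original sequence s_n
evens = subsequence(s, lambda k: 2 * k)      # n_k = 2k
odds = subsequence(s, lambda k: 2 * k - 1)   # n_k = 2k - 1
print([evens(k) for k in range(1, 6)], [odds(k) for k in range(1, 6)])
```

The code does not check that `sigma` is strictly increasing; that condition is what makes $s \circ \sigma$ a subsequence rather than an arbitrary rearrangement.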

Same-subsequence theorem 🚀

If a sequence converges, then every subsequence converges to the same limit

Proof

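Let $(s_{n_k})$ be a subsequence of $(s_n)$, and let $s = \lim s_n$. We know that $n_k \ge k$ for all $k$ (think about it, or show it inductively). Let $\varepsilon > 0$. Therefore, there is some $N$ such that $n > N$ implies $|s_n - s| < \varepsilon$. If $k > N$, then $n_k \ge k > N$, so $|s_{n_k} - s| < \varepsilon$. Therefore, $\lim_{k \to \infty} s_{n_k} = s$.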

As an aside, if a sequence diverges, it is not the case that every subsequence diverges. Consider the example $s_n = (-1)^n$. You can select all odd $n$, and this subsequence (constantly $-1$) converges.

As another aside, if a sequence has two subsequences with different limits, then the sequence diverges.

Bolzano-Weierstrass Theorem ⭐🚀

Every bounded sequence has a convergent subsequence.

To prove this, it is sufficient to show that you can make a monotonic subsequence from any sequence. A subsequence of a bounded sequence is bounded, so by the Bounded Monotone Theorem, we conclude that it converges.

Proof (infimum definitions, delta / 2 contradiction trick)

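Let $S_N$ be the set $\{s_n : n > N\}$. Because $(s_n)$ is bounded, we know that $\inf S_N$ is finite. We define

$$t_N = \inf S_N = \inf\{s_n : n > N\}.$$

Graphically, you can imagine $N$ being a vertical line in the sequence plot, $S_N$ being everything to the right of it, and $t_N$ being the greatest lower bound of whatever is to the right of $N$.

Case 1: $t_N$ is in the set $S_N$ for all $N$ (every tail attains its infimum). Say you have a subsequence $s_{n_1}, \dots, s_{n_k}$ so far. This means that $S_{n_k}$ has a minimal element, $t_{n_k}$. If this is the case, then pick $n_{k+1} > n_k$ with $s_{n_{k+1}} = t_{n_k}$, and make a new set $S_{n_{k+1}}$ (i.e. chop off everything up to and including this term in the original sequence). Now, we know that $t_{n_{k+1}} \ge t_{n_k}$ because $S_{n_{k+1}} \subseteq S_{n_k}$ (we removed some elements). Therefore, when we pick the next element according to this rule, it will be at least as large as $s_{n_{k+1}}$, meaning that the subsequence is monotonically increasing.

Case 2: There exists some $N$ such that $t_N$ is not in $S_N$, so $s_n > t_N$ for every $n > N$. Start with any $n_1 > N$, and consider the last element $s_{n_k}$ chosen so far. The distance $\delta = s_{n_k} - t_N$ is non-zero. Now, consider $t_N + \delta/2$, which can’t be a lower bound of $S_N$. In fact, infinitely many terms of $S_N$ lie below it: if only finitely many did, the smallest of them would be the minimum of $S_N$, contradicting $t_N \notin S_N$. So we can pick $n_{k+1} > n_k$ with $t_N < s_{n_{k+1}} < t_N + \delta/2 < s_{n_k}$. We can keep doing this for any $k$, and so we construct a monotonically decreasing subsequence.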

Subsequence-convergence theorem (extra) 🚀

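Theorem: if $(s_n)$ is a sequence, then

- There will be a subsequence of $(s_n)$ that converges to $t$ if and only if the set $\{n \in \mathbb{N} : |s_n - t| < \varepsilon\}$ is infinite for all $\varepsilon > 0$

- If $(s_n)$ is unbounded above, it has a subsequence with limit $+\infty$

- If $(s_n)$ is unbounded below, it has a subsequence with limit $-\infty$.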

Subsequential limit (extra) 🐧

We define a subsequential limit of $(s_n)$ as the limit (a real number, $+\infty$, or $-\infty$) of any subsequence of $(s_n)$. Now, if $(s_n)$ converges, then we know that there is only one such limit. But if $(s_n)$ doesn’t have a limit, then we are in interesting territory. We define $S$ as the set of subsequential limits of $(s_n)$.

Subsequential limits and lim sup, lim inf (extra) 🚀

For any sequence, there exists a monotonic subsequence whose limit is $\limsup s_n$ and a monotonic subsequence whose limit is $\liminf s_n$.

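We can unify the ideas of subsequential limits, $\limsup$, and $\liminf$ in this theorem:

if $S$ is the set of subsequential limits of $(s_n)$, then

- $S$ is non-empty

- $\sup S = \limsup s_n$ and $\inf S = \liminf s_n$

- $\lim s_n$ exists if and only if $S$ has only one element, namely $\lim s_n$.

Proof

1 is the immediate consequence of the previous theorem, because $\limsup s_n$ and $\liminf s_n$ must both be in $S$, which gives at least one number or symbol.

2: we can use the definitions. Consider any limit $t$ of a subsequence $(s_{n_k})$. Then, we have $n_k \ge k$, so $\{s_{n_k} : k > N\} \subseteq \{s_n : n > N\}$ for every $N$. Because a smaller set has a larger infimum and a smaller supremum, we have that

$$\inf\{s_n : n > N\} \le \inf\{s_{n_k} : k > N\} \le \sup\{s_{n_k} : k > N\} \le \sup\{s_n : n > N\}.$$

Therefore, taking $N \to \infty$ (and using that the middle terms both tend to $t$, since the subsequence converges to $t$), we have

$$\liminf s_n \le t \le \limsup s_n,$$

and this is true for all elements $t$ in $S$, so $\liminf s_n \le \inf S$ and $\sup S \le \limsup s_n$. From the previous theorem, we know that $\liminf s_n, \limsup s_n \in S$, and so they are our greatest lower bound and our least upper bound.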

3 is a reformulation of the lim-sup convergence theorem

Lim Sup and Lim Inf

Defining 🐧

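We define the following:

$$\limsup s_n = \lim_{N \to \infty} \sup\{s_n : n > N\}, \qquad \liminf s_n = \lim_{N \to \infty} \inf\{s_n : n > N\}.$$

Again, unlike our discussion above, we don’t restrict $(s_n)$ to be bounded, so these can be infinite. In our previous discussion of monotone sequences, we had $\lim s_n = \sup\{s_n : n \in \mathbb{N}\}$ for increasing sequences, but that fails in general. Consider a sequence that decays over time to zero, like $s_n = 1/n$. The supremum $\sup\{s_n\} = 1$ will be much larger than $\limsup s_n$, which goes down to zero.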
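The tail suprema and infima in this definition can be tabulated for an assumed example, $s_n = (-1)^n (1 + 1/n)$, whose $\limsup$ is $1$ and $\liminf$ is $-1$. For this particular sequence each tail’s supremum and infimum are attained at the first even and first odd index past $N$, so a long enough finite window computes them exactly; for a general sequence, a finite window only approximates them. The helper name is hypothetical.

```python
def s(n):
    """Assumed example: s_n = (-1)^n (1 + 1/n)."""
    return (-1) ** n * (1 + 1 / n)

def tail_sup_inf(seq, N, horizon=1000):
    """max and min of seq(n) over N < n <= horizon (finite stand-ins for the tail sup/inf)."""
    tail = [seq(n) for n in range(N + 1, horizon + 1)]
    return max(tail), min(tail)

for N in (1, 10, 100):
    print(N, tail_sup_inf(s, N))
```

As $N$ grows, the first component decreases toward $1$ and the second increases toward $-1$, which is the “drawing the bound tighter” picture.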

Properties of lim-sup 🚀

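The $\limsup$ is basically taking the supremum of a tail as a bound, and the limit is drawing the bound tighter and tighter. Therefore, it must be the case that

$$\limsup s_n \le \sup\{s_n : n > N\}$$

for any $N$. Similarly,

$$\liminf s_n \ge \inf\{s_n : n > N\}$$

for any $N$.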

this is true for any inf and sup: if the modifying function is monotonic, then . This is because it just moves the elements of the set without mixing up their order. Same for sup.

Lim-sup Theorem ⭐🚀

A bounded sequence $(s_n)$ converges if and only if $\liminf s_n = \limsup s_n$.

Proof (forward: limit definition moving to infimum and supremum definition. Backward direction: sandwich theorem)

(We need boundedness, because not all sequences have finite $\limsup s_n$ and $\liminf s_n$.)

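Forward direction: if $(s_n)$ converges, then let $s = \lim s_n$ and let $\varepsilon > 0$. Now, we know that $s - \varepsilon < s_n < s + \varepsilon$ for all $n > N$, for some $N$. Graphically, this looks like a band where all $s_n$ with $n > N$ lie.

Let $u_N = \inf\{s_n : n > N\}$ and $v_N = \sup\{s_n : n > N\}$. Since every $s_n$ with $n > N$ is below $s + \varepsilon$, we get $v_N \le s + \varepsilon$, and similarly $u_N \ge s - \varepsilon$. We know by default that $u_N \le v_N$, which means that

$$s - \varepsilon \le u_N \le v_N \le s + \varepsilon.$$

Now, this is actually true for all $M > N$ in place of $N$ because of the construction of $u_M$ and $v_M$: the larger the index, the smaller the set. Infimums of subsets are always at least the infimum of the original set, and supremums of subsets are always at most the supremum of the original set.

So, we have $s - \varepsilon \le u_M \le v_M \le s + \varepsilon$ for all $M > N$, and we recognize that this means that $|\liminf s_n - s| \le \varepsilon$ and $|\limsup s_n - s| \le \varepsilon$. Since $\varepsilon > 0$ was arbitrary, $\liminf s_n = \limsup s_n = s$, as desired.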

Overall:

- Expand limit definition

- Recognize infimum and supremum

- Recognize that we can reshape the inequality into limits

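Backward Direction: if $\liminf s_n = \limsup s_n = s$, then $(s_n)$ converges to $s$.

Well, this one is trivial. Writing $u_N = \inf\{s_n : n > N\}$ and $v_N = \sup\{s_n : n > N\}$, we know by definition that $u_{n-1} \le s_n \le v_{n-1}$ for all $n$. By the sandwich theorem, since $\lim u_N = \liminf s_n$ and $\lim v_N = \limsup s_n$ are the same number $s$, we have $\lim s_n = s$.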

Cauchy Sequences

The definition 🐧

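A sequence $(s_n)$ is a Cauchy sequence if for every $\varepsilon > 0$, there exists some $N$ such that $m, n > N$ implies that $|s_n - s_m| < \varepsilon$.

Graphically, past some index $N$, all the terms fit inside a window of width $\varepsilon$: every pair of later terms is within distance $\varepsilon$ of each other.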
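A finite-window version of this definition is easy to compute: the largest pairwise distance $|s_m - s_n|$ over indices $m, n > N$ in the window is just max minus min. As a sketch, take the partial sums $s_n = \sum_{k=1}^{n} 1/k^2$ (an assumed example). Since $\sum_{k > N} 1/k^2 < \sum_{k > N} \frac{1}{k(k-1)} = \frac{1}{N}$, the spread past $N$ stays below $1/N$. A finite window can only illustrate the Cauchy property, not prove it; the function names are hypothetical.

```python
from itertools import accumulate

def partial_sums(count):
    """[s_1, ..., s_count] with s_n = sum_{k=1}^{n} 1/k**2."""
    return list(accumulate(1 / k**2 for k in range(1, count + 1)))

def cauchy_spread(seq, N):
    """max |s_m - s_n| over m, n > N in the list (seq[0] holds s_1)."""
    tail = seq[N:]  # seq[N] is s_{N+1}
    return max(tail) - min(tail)

sums = partial_sums(10_000)
for N in (10, 100, 1000):
    print(N, cauchy_spread(sums, N), 1 / N)
```

Notice that nothing here uses the value of the limit, which is exactly why the Cauchy criterion is handy when the limit is unknown.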

Cauchy equivalence to convergence ⭐🚀

Theorem: a sequence is a convergent sequence if and only if it’s a Cauchy sequence

Proof (forward: triangle inequality. Backward: picking an epsilon, proof by contradiction starting with Cauchy and an incorrect assumption about limits, yielding a contradiction)

Forward direction: if $(s_n)$ converges to $s$, then for every $\varepsilon > 0$ there is some $N$ with $|s_n - s| < \frac{\varepsilon}{2}$ for all $n > N$. Using the triangle inequality, for all $m, n > N$ we have $|s_n - s_m| \le |s_n - s| + |s - s_m| < \varepsilon$, which is the definition of a Cauchy sequence.

Backward direction: We want to show that a Cauchy sequence is bounded and that $\liminf s_n = \limsup s_n$, which allows us to show that the sequence converges (by the Lim-sup Theorem).

Let’s start by showing that the Cauchy sequence is bounded. We start with $|s_n - s_m| < \varepsilon$ for all $m, n > N$, for some $N$. Now, we want to show that the whole sequence is bounded, and we see a reasonable split between $n \le N$ and $n > N$. The location of the split is arbitrary. So, let $\varepsilon = 1$.

Therefore, $|s_n - s_m| < 1$ for all $m, n > N$. We can put our thumb down on some $m$, say $m = N + 1$. Therefore, we have $|s_n| \le |s_n - s_{N+1}| + |s_{N+1}| < |s_{N+1}| + 1$ for all $n > N$. This last step is another good trick to have, because we are eventually pushing towards the bounding case. Here, we have just accomplished that. We have shown that for all $n > N$, all $s_n$ are below a certain finite bound. Now, we have left $s_1, \dots, s_N$, but this is trivial because it’s a finite set. A finite set is always bounded. If the infinite tail is bounded, and the finite head is bounded, then we conclude that the whole sequence is bounded.

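Next, we want to show that $\liminf s_n = \limsup s_n$.

To do this, let $\varepsilon > 0$, and let $u_N = \inf\{s_n : n > N\}$ and $v_N = \sup\{s_n : n > N\}$. It is sufficient to show that $v_N - u_N \le \varepsilon$ for some $N$: since $u_N \le \liminf s_n \le \limsup s_n \le v_N$ for every $N$, that gives $0 \le \limsup s_n - \liminf s_n \le \varepsilon$ for every $\varepsilon > 0$.

Because things look a bit daunting, let’s use a proof by contradiction. Let’s start with the Cauchy assumption that $|s_n - s_m| < \varepsilon$ for all $m, n > N$, for some $N$.

Now, let’s say that $v_N - u_N > \varepsilon$. Now, let’s pick a perturbation $\delta = \frac{(v_N - u_N) - \varepsilon}{2} > 0$. We know that $u_N + \delta$ isn’t a lower bound, so there exists some $n > N$ with $s_n < u_N + \delta$, and similarly, we know that $v_N - \delta$ isn’t an upper bound, so there exists some $m > N$ with $s_m > v_N - \delta$. Note that we implicitly have $m, n > N$.

Now, we know that $s_m - s_n > (v_N - \delta) - (u_N + \delta) = (v_N - u_N) - 2\delta = \varepsilon$, so we get that $|s_m - s_n| > \varepsilon$. From the Cauchy assumption, we get that $|s_m - s_n| < \varepsilon$. This is a contradiction to the original setup by the Cauchy sequence, so $v_N - u_N \le \varepsilon$. We are done.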

Using Cauchy for recursive definitions 🧸

Suppose that you had . Well, you can try to push it to the Cauchy definition by computing , which you do recursively: , and the first term you can compute recursively. For this particular problem, you get that .

Now, because decreases with larger , we can just set , which means and we see that for all , the inequality is satisfied.
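As a sketch of this workflow, here is an assumed stand-in recursion chosen only for illustration: $s_1 = 0$, $s_2 = 1$, $s_{n+1} = \frac{s_n + s_{n-1}}{2}$. The consecutive differences satisfy $s_{n+1} - s_n = -\frac{1}{2}(s_n - s_{n-1})$, so $|s_{n+1} - s_n| = 2^{-(n-1)}$, and for $m > n$ a telescoping sum plus the triangle inequality gives $|s_m - s_n| < 2^{-(n-2)}$. Choosing $N$ with $2^{-(N-1)} < \varepsilon$ then works for all $m, n > N$. The function names are hypothetical; exact `Fraction` arithmetic avoids rounding.

```python
from fractions import Fraction

def averaging_sequence(count):
    """Assumed example: s_1 = 0, s_2 = 1, s_{n+1} = (s_n + s_{n-1}) / 2."""
    s = [Fraction(0), Fraction(1)]
    while len(s) < count:
        s.append((s[-1] + s[-2]) / 2)
    return s  # s[i] holds s_{i+1}

def cauchy_N(eps):
    """Smallest N with 2**-(N-1) < eps, based on |s_m - s_n| < 2**-(n-2) for m > n."""
    N = 1
    while Fraction(1, 2 ** (N - 1)) >= eps:
        N += 1
    return N

s = averaging_sequence(80)
N = cauchy_N(Fraction(1, 10**6))
tail = s[N:]  # s[N] is s_{N+1}, so this is every sampled index n > N
print(N, float(max(tail) - min(tail)))
```

For this recursion the limit also happens to be computable ($2/3$), but the Cauchy argument never needed it.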