Dual Gradient Descent

| Tags | Constrained |

|---|

Dual Gradient Descent

This is how you can turn a constrained optimization problem into an unconstrained.

Let’s say you wanted to do

You can set up the lagrangian:

Now, we propose that we can yield a constrained solution with the following objective:

Why? Well, let’s set up two scenarios

- Scenario 1: . In this case, when we run the inner optimization, the best value we can get is using . Then, the outer optimization is the unchanged .

- Scenario 2: . In this case, the inner optimization will diverge, as the minimum is achieved when , and there’s no way to recover in the outer loop.

Therefore, we see that using this objective, the only viable solution will follow the constraint

Implementation

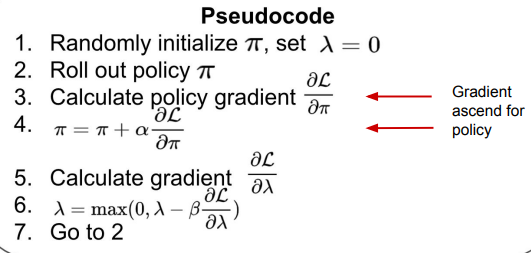

In practice, we just alternate a gradient WRT and the policy.