Constrained Optimization

| Tags | Constrained |

|---|

Constrained optimization

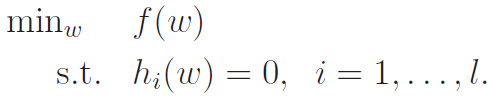

Consider the following problem:

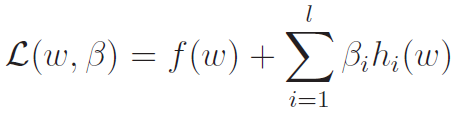

You can use the legrange multiplier method to solve this. As a shorthand, we can also use the Lagrangian, which is expressed below

This is convenient, because the Lagrangian maps the constrained optimization problem into an unconstrained optimization problem. The critical points where are your critical points on the original constrained optimization problem.

This is super helpful for simple constraint problems. But now we will concern ourselves with optimization problems with constraints, which can be more common.

Generalized Lagrangian

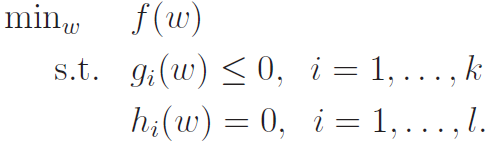

In addition to equality, the generalized optimization problem has inequalities as well. We call these primal optimization problems.

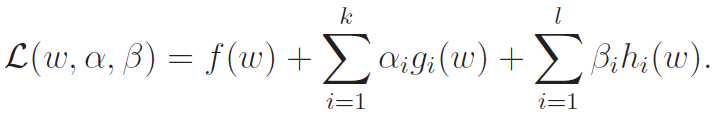

We define the generalized Lagrangian below:

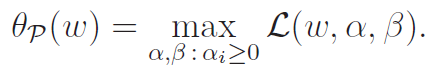

Now, how the heck are we going to use this? Well, we could consider defining a maximization problem as below

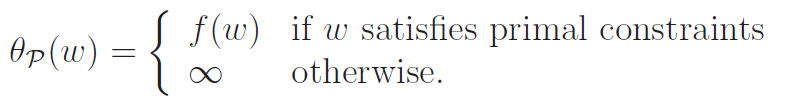

(the stands for primal.) Now, let's understand why this makes sense. If , we see that . this is because you could make the arbitrarily large. If , then the same thing happens. We can make the arbitrarily positive or negative to make it infinity. It is only when these conditions are satisfied that is finite.

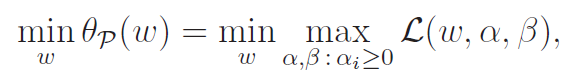

As such, we can reframe our objective as a min-max problem

We define , and when we find that minimum, we can obtain the argument that minimized

Legrangian Duals

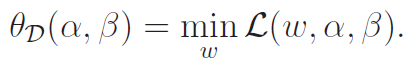

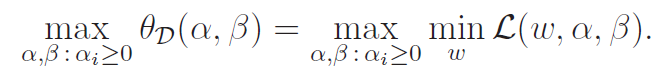

Now, we are going to dive a little deeper on min-max vs max-min. We propose a dual as defined as follows:

The dual optimiazation problem is thus

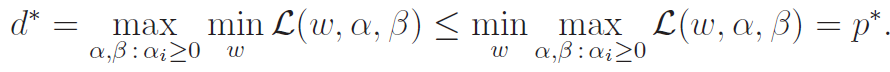

Now, what is the difference between max-min and min-max? The maximum of the minimums is smaller than the minimums of the maximums. This the idea that the highest chair on the floor is still shorter than the lowest bump on the ceiling. As such, we get

Necessary dual conditions

There are some conditions in which , which allows us to solve the dual problem instead of the original problem. This is beneficial because the min is unconstrained, while the max is constrained. It might be easier to do unconstrained optimization first.

First, we suppose that are convex (hessian positive semidefinite) and that is affine (linear with intercept). With these exceptions only, we can say that !! Nice!

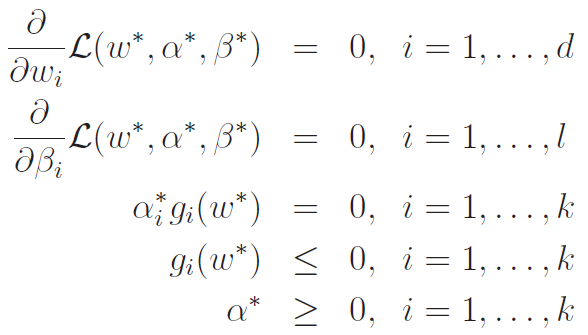

Interestingly, under these conditions, we get that satisfy the Karush-Kuhn-Tucker (KKT) conditions:

Specifically, we care about This we call the KTT Dual Coomplementarity condition. This shows that in optimality, if is non-zero, then , meaning that it is at its limit. We call this constraint as being active, as we have turned the inequality into an equality.