Black Box Meta-Learning

| Tags | CS 330, Meta-Learning Methods |
|---|---|

Meta-Learning as a sequence model

If you have a bunch of data in a task's training set, you can imagine feeding it into a network, as if you were teaching a student, and then querying the network with the test data. A natural way to do this is with a recurrent neural network, which reads the examples one at a time.

Version 1: hypernetwork

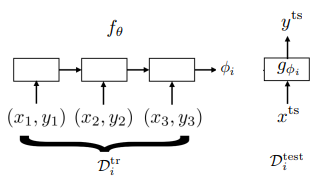

You train a neural network that outputs the parameters of a second, task-specific network, and then you predict test points with that second network.

The network that outputs the parameters is typically a recurrent-style neural network that reads the training examples in sequence.

You train this with supervised learning by pushing the predicted values as close to the true test labels as possible; the gradient propagates back through the generated parameters into the network that produced them.
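One common way to write this objective is sketched below. The notation is assumed here for illustration: $f_\theta$ is the network that outputs parameters, $\phi_i$ are the parameters it produces for task $i$, $g_{\phi_i}$ is the resulting predictor, $\mathcal{D}_i^{\text{tr}}$ and $\mathcal{D}_i^{\text{ts}}$ are the task's training and test sets, and $\ell$ is any supervised loss.

$$
\phi_i = f_\theta\big(\mathcal{D}_i^{\text{tr}}\big), \qquad
\min_\theta \sum_{i} \sum_{(x, y) \in \mathcal{D}_i^{\text{ts}}} \ell\big(g_{\phi_i}(x),\, y\big)
$$

Only $\theta$ is optimized directly; each $\phi_i$ is a function of $\theta$, which is how the test loss reaches the outer network.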

The problem is that the output is the full parameter vector of a neural network, so this doesn't seem that scalable! Some solutions exist that parameterize one layer at a time or limit the adjustable parameters, but ultimately this can be too complicated.
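To make the scaling concern concrete, here is a minimal sketch of the hypernetwork idea in plain Python. Everything in it is an assumption made for illustration: the names `init_hypernet`, `hypernet`, `predict`, and `meta_loss` are hypothetical, the recurrent encoder is replaced by simple mean-pooling, and the task model is only a linear predictor. Notice that the hypernetwork needs one output per parameter of the task model, which is what becomes expensive for real networks.

```python
import random


def init_hypernet(in_dim, seed=0):
    """Meta-parameters theta: a linear map from a pooled (x, y) embedding
    to the in_dim + 1 parameters (weights and bias) of the task model."""
    rng = random.Random(seed)
    emb_dim = in_dim + 1  # one (x, y) pair, concatenated
    out_dim = in_dim + 1  # one output per task-model parameter
    return [[rng.gauss(0.0, 0.1) for _ in range(emb_dim)] for _ in range(out_dim)]


def hypernet(theta, train_set):
    """Map a training set [(x, y), ...] to task parameters phi."""
    n = len(train_set)
    dim = len(train_set[0][0]) + 1
    pooled = [0.0] * dim
    # Mean-pool the concatenated (x, y) pairs (assumed stand-in for an RNN).
    for x, y in train_set:
        for j, v in enumerate(list(x) + [y]):
            pooled[j] += v / n
    return [sum(w * p for w, p in zip(row, pooled)) for row in theta]


def predict(phi, x):
    """Task model: a linear predictor whose weights and bias are phi."""
    *w, b = phi
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def meta_loss(theta, train_set, test_set):
    """Mean squared error on the test set, using parameters generated
    from the training set."""
    phi = hypernet(theta, train_set)
    return sum((predict(phi, x) - y) ** 2 for x, y in test_set) / len(test_set)


theta = init_hypernet(in_dim=2)
train_set = [((1.0, 0.0), 1.0), ((0.0, 1.0), -1.0)]
test_set = [((1.0, 1.0), 0.0)]
phi = hypernet(theta, train_set)
loss = meta_loss(theta, train_set, test_set)
```

In practice you would minimize `meta_loss` over many tasks with an autodiff framework; the sketch only shows the forward pass.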

Version 2: test set as the last input

You might be saying to yourself: why don't I feed the test input into the RNN along with the training data, and use the hidden state of the RNN to represent what has been learned? This is more similar to how we work. When we learn something, we don't make a new brain; we just build a representation of the material.

Indeed, this is something you can do! Just feed the test input as the last input, and zero out its label because you don't have it. Methods like MANN and SNAIL do this.
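The input layout described above can be sketched with a tiny hand-written RNN in plain Python. This is an illustrative sketch under stated assumptions, not the architecture of MANN or SNAIL: the names `init_rnn`, `step`, and `predict_test` are hypothetical, the weights are random and untrained, and the cell is a plain tanh recurrence. Each input is `x` concatenated with a label slot, and the test input uses `0.0` in that slot.

```python
import math
import random


def init_rnn(in_dim, hidden_dim, seed=0):
    """Random, untrained weights for a plain tanh RNN with a scalar readout."""
    rng = random.Random(seed)

    def mat(rows, cols):
        return [[rng.gauss(0.0, 0.1) for _ in range(cols)] for _ in range(rows)]

    return {
        "W_in": mat(hidden_dim, in_dim + 1),  # input is x plus one label slot
        "W_h": mat(hidden_dim, hidden_dim),
        "w_out": [rng.gauss(0.0, 0.1) for _ in range(hidden_dim)],
    }


def matvec(M, v):
    return [sum(m * vi for m, vi in zip(row, v)) for row in M]


def step(params, h, x, y):
    """One recurrent update on the concatenated input (x, y)."""
    a = matvec(params["W_in"], list(x) + [y])
    b = matvec(params["W_h"], h)
    return [math.tanh(ai + bi) for ai, bi in zip(a, b)]


def predict_test(params, train_set, x_test):
    """Read the labeled training pairs, then the test input with a zeroed label."""
    h = [0.0] * len(params["W_h"])
    for x, y in train_set:
        h = step(params, h, x, y)
    h = step(params, h, x_test, 0.0)  # label slot zeroed: the label is unknown
    return sum(w * hi for w, hi in zip(params["w_out"], h))


params = init_rnn(in_dim=2, hidden_dim=4)
pred_a = predict_test(params, [((1.0, 0.0), 1.0)], (0.5, 0.5))
pred_b = predict_test(params, [((1.0, 0.0), -1.0)], (0.5, 0.5))
```

The two calls use the same weights and the same test input but different training labels, so any difference between `pred_a` and `pred_b` comes only from the hidden state. No separate set of task parameters is ever produced.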

Pros and cons

Black box models are expressive and easy to combine with other learning problems, like reinforcement learning.

Unfortunately, they are complex models and we don't exactly know what's going on inside them. Moreover, they are not that data-efficient.

Language models as black box meta-learners

We can give a large language model like GPT-3 a text prompt that demonstrates some relationship, and the model can then solve similar tasks after reading this prompt.

The results are impressive, but they depend on how the prompt is phrased. Furthermore, the failure modes are unintuitive.
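As a rough illustration of how a prompt plays the role of the training set, the sketch below assembles a few-shot prompt in Python. The helper name `build_few_shot_prompt` and the `Input:`/`Output:` template are assumptions made for this example, not a format any particular model requires. No model is called; the code only builds the string.

```python
def build_few_shot_prompt(examples, query):
    """Format demonstration pairs, then the query with its output left blank."""
    blocks = [f"Input: {x}\nOutput: {y}" for x, y in examples]
    # The query is the "test input": its label is what the model should fill in.
    blocks.append(f"Input: {query}\nOutput:")
    return "\n\n".join(blocks)


prompt = build_few_shot_prompt([("cheese", "fromage"), ("dog", "chien")], "cat")
```

The demonstration pairs correspond to the labeled training examples, and the final line with the empty output corresponds to the test input whose label is withheld. Changing the template is one simple way to see how results vary with the prompt.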

Some things that help here:

- The training data should have temporal correlation, and words should have many meanings (essentially to regularize).

- Models should have larger capacity.