Singular Value Decomposition

| Tags |

|---|

SVD (Single Value Decomposition)

SVD is just an extension of eigendecomposition where we have , where U and V are square, orthogonal matrices. The null space of is the same as (which means that if is square but non-invertible, may still be non-square).

- The is diagonal and referred to as

single values. It may not be a full diagonal

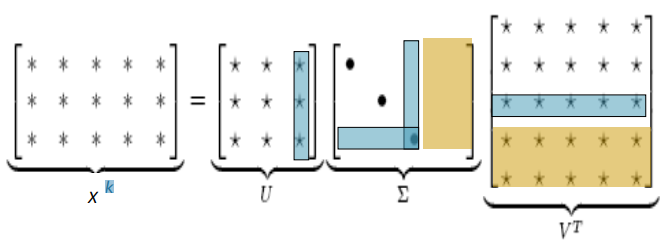

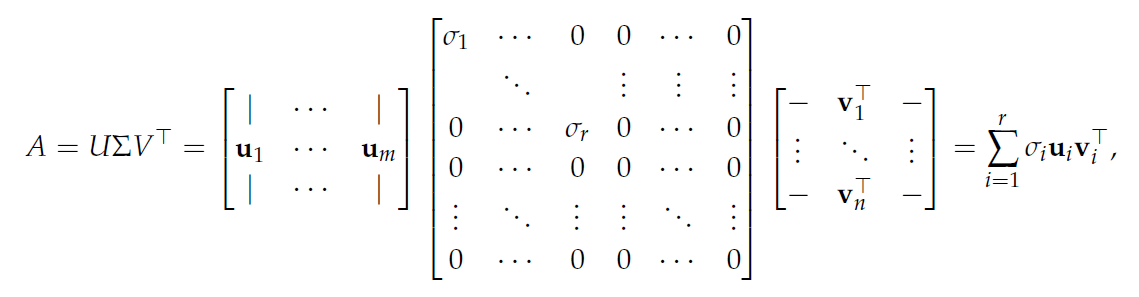

The full SVD:

So technically, the SVD is expressed as block matrix product

In this case, represents the null space of , and represents the complement of the range of . The SVD decomposes a matrix into the four fundamental subspaces.

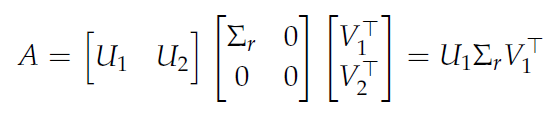

The compressed SVD:

You can just rewrite the SVD as , and that is sufficient SVD. In this case, would be , , and .

SVD on non-square matrices

The is in the output space, the is in the input space, and the is a rectangular projection matrix which removes a select number of dimensions before mapping it (invertibly) to the output. In other words, instead of being a scaling factor (in a full-rank square matrix), is a projection-scaler.

Good Visualization

You basically have as transformation matrices, and as selecting the relevant rows of and scaling them. Note that depending on which way the rectangle goes, this will reject whole rows or whole columns. You can sort of understand this as doing the projection in a clean way. You’re rotating onto a good cutting surface with , cutting with , and then re-rotating with .

Deeper theoretical look

Spectral theorem: for symmetric matrices, you can make an orthonormal eigenbasis such that where is the eigenbasis. Now, can we extend this idea to arbitrary matrices?

Essentially, SVD extends the idea of an eigenvector to non-square spaces. To be brief, where is the eigenbasis of and is the eigenbasis of .

Singular values

The singular values of are . Now, are the eigenvalues of the symmetric matrix . Now, if were shaped , then there will be singular values. We list these in decreasing order.

More specifically,

Singular Value Theorem 🚀

If you have a matrix , if are orthonormal eigenbasis for , then

- are mutually orthogonal

- proof: . Now, we know that are mutually orthogonal, so we are done.

- . Let . You can imagine that , which means that is the "eigenvector" of in the -space

- proof: we know from a previous result that . Because , the proof is obvious.

- More concretely: are eigenvectors of , and are eigenvectors of

- where and are orthogonal matrices and is a diagonal matrix.

- Proof: Consider , where is the column matrix of the orthonormal -basis. We now that , so we can make a column matrix of . This can be decomposed into a column matrix of and a diagonal of , with some sparseness (because recall that if , there's got to be some that maps to . Therefore, we get , which mean sthat as desired.

Reduced SVD

What does this mean in terms of the fundamental subspaces?

Well, the range of becomes the range of .

The range of becomes the orthogonal complement to the range of

The range of becomes the orthogonal complement to the null space of

The range of becomes the null space of

As such, the ranges of the four matrices cover the four fundamental subspaces of !! By selecting , you are essentially piecing together the behavior of .