Projections

| Tags | Math 104, Math 113 |
|---|---|

The $\perp$ operator

$U^\perp$ is the set of all vectors $v \in V$ such that $\langle v, u \rangle = 0$ for all $u \in U$. You can think of this as the annihilator of a subspace, in terms of our dual vocabulary.
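To make the definition concrete, here is a minimal plain-Python sketch. It assumes real vectors stored as lists with the standard dot product as the inner product, and the helper names `inner` and `in_orthogonal_complement` are hypothetical. Because the inner product is linear, $\langle v, u \rangle = 0$ for every $u \in U$ as soon as it holds for every vector in a spanning list of $U$, so the check only needs that list.

```python
def inner(x, y):
    """Standard dot product on R^n (real vectors stored as lists)."""
    return sum(a * b for a, b in zip(x, y))

def in_orthogonal_complement(v, spanning_list, tol=1e-12):
    """Return True if v is orthogonal to every vector in a spanning list of U.

    By linearity of the inner product, this is equivalent to v being in U^perp.
    """
    return all(abs(inner(v, u)) <= tol for u in spanning_list)

# U = span{(1, 0, 0), (0, 1, 0)}, the xy-plane in R^3
U = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
print(in_orthogonal_complement([0.0, 0.0, 5.0], U))  # True: the z-axis is U^perp
print(in_orthogonal_complement([1.0, 0.0, 1.0], U))  # False
```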

Properties

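Here are the properties (assume that $U$ is a subspace of $V$):

- if $U$ is a subspace, then $U^\perp$ is also a subspace (in fact, $U^\perp$ is a subspace even when $U$ is just a subset of $V$)

- $\{0\}^\perp = V$

- $V^\perp = \{0\}$

- $U \cap U^\perp = \{0\}$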

- if $U \subseteq W$, then $W^\perp \subseteq U^\perp$

-

-

- $(U + W)^\perp = U^\perp \cap W^\perp$ (basically, the "not" of two things together is the intersection of what they're not)
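The property $(U + W)^\perp = U^\perp \cap W^\perp$ follows directly from the definition. A short sketch of the argument, assuming $U$ and $W$ are subspaces of $V$ (so that $0 \in U$ and $0 \in W$):

```latex
\begin{aligned}
v \in (U + W)^\perp
&\iff \langle v, u + w \rangle = 0 \quad \text{for all } u \in U,\ w \in W \\
&\iff \langle v, u \rangle = 0 \text{ for all } u \in U \ \text{ and } \ \langle v, w \rangle = 0 \text{ for all } w \in W \\
&\iff v \in U^\perp \cap W^\perp .
\end{aligned}
```

In the middle step, setting $w = 0$ (or $u = 0$) gives the forward direction, and additivity of the inner product in its second slot, $\langle v, u + w \rangle = \langle v, u \rangle + \langle v, w \rangle$, gives the reverse.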

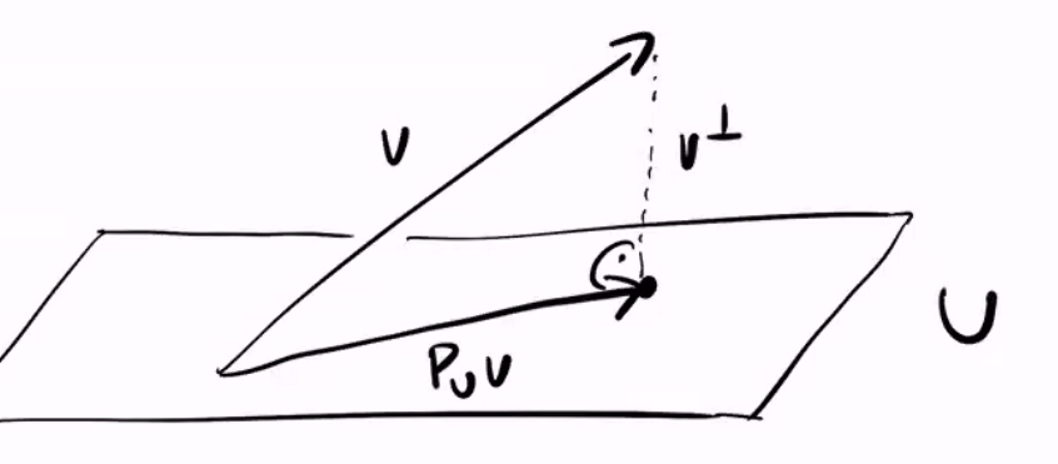

Projection operator

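Inner products can be understood as projections onto the target vector: in a real inner product space, $\langle v, u \rangle / \|u\|$ is the signed length of the projection of $v$ onto $u$. Generally speaking, to project any vector $v$ onto a nonzero vector $u$, we have

$$P_u v = \frac{\langle v, u \rangle}{\langle u, u \rangle}\, u = \frac{\langle v, u \rangle}{\|u\|^2}\, u$$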
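A minimal sketch of the one-vector formula $P_u v = \frac{\langle v, u \rangle}{\langle u, u \rangle} u$ in plain Python, assuming real vectors stored as lists and the standard dot product as the inner product; the function names are illustrative only.

```python
def inner(x, y):
    """Standard dot product on R^n (real vectors only)."""
    return sum(a * b for a, b in zip(x, y))

def project_onto_vector(v, u):
    """Return P_u v = (<v, u> / <u, u>) u, the projection of v onto span{u}.

    Assumes u is nonzero.
    """
    scale = inner(v, u) / inner(u, u)
    return [scale * ui for ui in u]

print(project_onto_vector([3.0, 4.0], [2.0, 0.0]))  # [3.0, 0.0]
```

The leftover $v - P_u v$ is orthogonal to $u$; for $v = (3, 4)$ and $u = (2, 0)$ it is $(0, 4)$.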

Projection onto Orthonormal Basis

A projection operator is very simple with an orthonormal basis: given an orthonormal basis $e_1, \dots, e_m$ of $U$, we have

$$P_U v = \langle v, e_1 \rangle e_1 + \cdots + \langle v, e_m \rangle e_m$$

Projecting any vector onto a subspace is equivalent to projecting it onto each vector of an orthogonal basis of that subspace and taking the vector sum (more on this later).
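The "project onto each basis vector, then add" recipe can be sketched in plain Python. This assumes real vectors as lists and a basis that is orthogonal (not necessarily orthonormal); `project_onto_subspace` is a hypothetical name.

```python
def inner(x, y):
    """Standard dot product on R^n."""
    return sum(a * b for a, b in zip(x, y))

def project_onto_subspace(v, orthogonal_basis):
    """Project v onto U = span(orthogonal_basis) by projecting onto each
    basis vector separately and adding the results.

    Assumes the basis vectors are nonzero and pairwise orthogonal.
    """
    result = [0.0] * len(v)
    for u in orthogonal_basis:
        scale = inner(v, u) / inner(u, u)  # coefficient <v, u> / ||u||^2
        result = [r + scale * ui for r, ui in zip(result, u)]
    return result

# An orthogonal (but not orthonormal) basis of the xy-plane in R^3
basis = [[1.0, 1.0, 0.0], [1.0, -1.0, 0.0]]
print(project_onto_subspace([1.0, 2.0, 3.0], basis))  # [1.0, 2.0, 0.0]
```

With an orthonormal basis every $\langle u, u \rangle$ is $1$, and the loop reduces to $\sum_i \langle v, e_i \rangle e_i$. The orthogonality assumption matters: for a non-orthogonal basis, summing the individual projections does not give $P_U v$ in general.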

Properties

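- $P_U \in \mathcal{L}(V)$ (in other words, it is a linear map)

- $P_U u = u$ (if $u \in U$)

- $P_U w = 0$ if $w \in U^\perp$

- $\operatorname{range} P_U = U$

- $\operatorname{null} P_U = U^\perp$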

- $v - P_U v \in U^\perp$ (intuitively, this is because $v = P_U v + w$ for some $w \in U^\perp$; just rearrange this equation and you will see it)

- $P_U^2 = P_U$ (makes sense)

- $\|P_U v\| \le \|v\|$ (also makes sense)
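These properties are easy to sanity-check numerically on one example. The sketch below assumes real vectors as lists and an orthonormal basis of $U$; the helper `P` (a hypothetical name) implements $P_U v = \sum_i \langle v, e_i \rangle e_i$, and comparisons use a small tolerance for rounding error.

```python
import math

def inner(x, y):
    """Standard dot product on R^n."""
    return sum(a * b for a, b in zip(x, y))

def norm(x):
    return math.sqrt(inner(x, x))

def P(v, onb):
    """P_U v = <v, e_1> e_1 + ... + <v, e_m> e_m, where onb is an
    orthonormal basis e_1, ..., e_m of U."""
    out = [0.0] * len(v)
    for e in onb:
        c = inner(v, e)
        out = [o + c * ei for o, ei in zip(out, e)]
    return out

def close(x, y, tol=1e-12):
    """Entrywise comparison with a small tolerance for rounding error."""
    return all(abs(a - b) <= tol for a, b in zip(x, y))

onb = [[0.6, 0.8, 0.0], [0.0, 0.0, 1.0]]  # orthonormal basis of a plane U in R^3
w = [0.8, -0.6, 0.0]                       # spans U^perp
v = [3.0, -1.0, 2.0]
Pv = P(v, onb)
r = [a - b for a, b in zip(v, Pv)]         # v - P_U v

# Each check below prints True
print(all(close(P(e, onb), e) for e in onb))        # P_U u = u for u in U
print(close(P(w, onb), [0.0, 0.0, 0.0]))            # P_U w = 0 for w in U^perp
print(all(abs(inner(r, e)) <= 1e-12 for e in onb))  # v - P_U v is in U^perp
print(close(P(Pv, onb), Pv))                        # P_U^2 = P_U
print(norm(Pv) <= norm(v))                          # ||P_U v|| <= ||v||
```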

Projection as Contraction

You can prove that $\|v - P_U v\| \le \|v - u\|$ for all $u \in U$. Therefore, the projected vector $P_U v$ is the closest vector to $v$ in $U$.
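The inequality comes from the Pythagorean theorem. A sketch of the standard argument, for any $u \in U$, using that $v - P_U v \in U^\perp$ and $P_U v - u \in U$ are orthogonal:

```latex
\begin{aligned}
\|v - u\|^2 &= \|(v - P_U v) + (P_U v - u)\|^2 \\
            &= \|v - P_U v\|^2 + \|P_U v - u\|^2 \\
            &\ge \|v - P_U v\|^2 ,
\end{aligned}
```

with equality exactly when $u = P_U v$.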

Projections as matrices

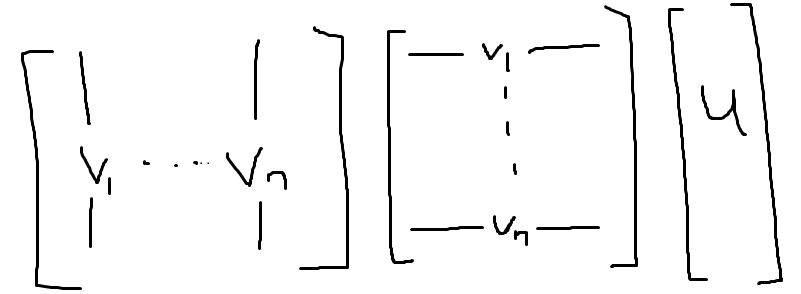

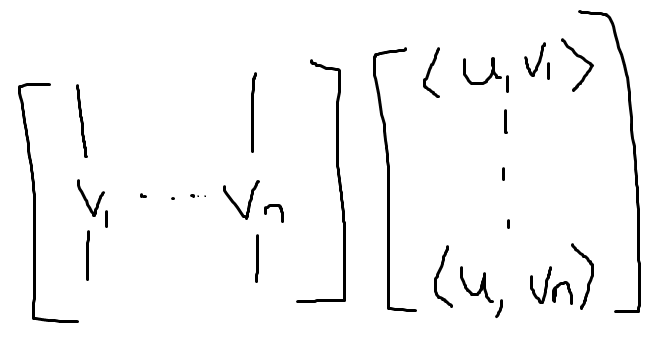

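Suppose you had a set of orthonormal vectors $q_1, \dots, q_m \in \mathbb{R}^n$ that you wanted to project a vector $v$ onto (that is, onto their span). You would do the following:

$$Pv = \langle v, q_1 \rangle q_1 + \cdots + \langle v, q_m \rangle q_m$$

As it turns out, if you let $Q$ be the $n \times m$ matrix whose $i$-th column is $q_i$, the projection operation above can be represented as

$$Pv = QQ^\top v$$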
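A small plain-Python check that the matrix form $QQ^\top v$ agrees with the sum $\sum_i \langle v, q_i \rangle q_i$, assuming real entries and matrices stored as lists of rows; `matmul` and `transpose` are illustrative helpers, and the particular $Q$ and $v$ are arbitrary.

```python
def matmul(A, B):
    """Product of matrices stored as lists of rows (inner dimensions must match)."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]

def transpose(A):
    return [list(row) for row in zip(*A)]

# Q is 3 x 2; its columns q_1 = (0.6, 0.8, 0) and q_2 = (0, 0, 1) are orthonormal
Q = [[0.6, 0.0],
     [0.8, 0.0],
     [0.0, 1.0]]
v = [[3.0], [-1.0], [2.0]]  # v as a 3 x 1 column

# Matrix form, evaluated right to left: Q (Q^T v)
matrix_form = matmul(Q, matmul(transpose(Q), v))

# Sum form: <v, q_1> q_1 + <v, q_2> q_2
v_flat = [row[0] for row in v]
sum_form = [0.0, 0.0, 0.0]
for q in transpose(Q):  # rows of Q^T are the columns q_i of Q
    c = sum(a * b for a, b in zip(v_flat, q))
    sum_form = [s + c * qi for s, qi in zip(sum_form, q)]

print(all(abs(matrix_form[i][0] - sum_form[i]) < 1e-12 for i in range(3)))  # True
```

Evaluating $Q(Q^\top v)$ right to left avoids forming the $n \times n$ matrix $QQ^\top$, which matters when $n$ is large and $m$ is small.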

Refresher: Why $Q^\top Q$ differs from $QQ^\top$

Now, we know that $Q^\top Q = I_m$, but because $Q$ is not a square matrix, a left inverse is not necessarily a right inverse, so $QQ^\top \neq I_n$ in general. If $Q$ were square, "projecting" a vector would do nothing! This makes sense, because the columns of such a square matrix would span the entire vector space, not just a subspace.
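The asymmetry shows up immediately on a small example. This plain-Python sketch assumes a real $3 \times 2$ matrix $Q$ with orthonormal columns (chosen arbitrarily) and illustrative helper names.

```python
def matmul(A, B):
    """Product of matrices stored as lists of rows."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]

def transpose(A):
    return [list(row) for row in zip(*A)]

def is_identity(M, tol=1e-12):
    """True if the square matrix M equals the identity up to rounding error."""
    n = len(M)
    return all(abs(M[i][j] - (1.0 if i == j else 0.0)) <= tol
               for i in range(n) for j in range(n))

Q = [[0.6, 0.0],
     [0.8, 0.0],
     [0.0, 1.0]]
QtQ = matmul(transpose(Q), Q)  # 2 x 2
QQt = matmul(Q, transpose(Q))  # 3 x 3

print(is_identity(QtQ))  # True: Q^T is a left inverse of Q
print(is_identity(QQt))  # False: Q^T is not a right inverse
```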

Using projection

Linear regression

- you can perform linear regression by projecting $b$ onto the subspace spanned by the columns of $A$ (that is, $\operatorname{range} A$). By doing this, you will come up with an $\hat{x}$ such that $\|A\hat{x} - b\|$ is minimized. This is because projections minimize distance!
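A minimal sketch for the simplest case, fitting a line $y \approx c_0 + c_1 x$ in plain Python. Here $A$ is assumed to have columns $(1, \dots, 1)$ and $(x_1, \dots, x_n)$, and $b$ is the list of $y$-values. Requiring the residual $b - A\hat{x}$ to lie in $(\operatorname{range} A)^\perp$ gives the normal equations $A^\top A \hat{x} = A^\top b$, which this two-parameter example solves by Cramer's rule; `fit_line` is a hypothetical name.

```python
def fit_line(xs, ys):
    """Least-squares fit of y ~ c0 + c1 * x via the normal equations.

    The columns of A are (1, ..., 1) and (x_1, ..., x_n); A @ [c0, c1] is the
    projection of ys onto range(A). A^T A c = A^T y is 2 x 2 here and is
    solved by Cramer's rule.
    """
    n = len(xs)
    sx = sum(xs)
    sxx = sum(x * x for x in xs)
    sy = sum(ys)
    sxy = sum(x * y for x, y in zip(xs, ys))
    # A^T A = [[n, sx], [sx, sxx]],  A^T y = [sy, sxy]
    det = n * sxx - sx * sx  # nonzero unless all x_i are equal
    c0 = (sy * sxx - sx * sxy) / det
    c1 = (n * sxy - sx * sy) / det
    return c0, c1

print(fit_line([0, 1, 2, 3], [1, 3, 5, 7]))  # (1.0, 2.0): the data lie on y = 1 + 2x

xs = [0.0, 1.0, 2.0]
ys = [0.0, 1.0, 1.0]
c0, c1 = fit_line(xs, ys)
residual = [y - (c0 + c1 * x) for x, y in zip(xs, ys)]
# The residual is orthogonal to both columns of A, i.e. it lies in (range A)^perp
print(sum(residual), sum(r * x for r, x in zip(residual, xs)))  # both ~0
```

For more than two parameters, one would solve the normal equations with a general linear solver, or better, use a QR factorization and the $QQ^\top$ picture above.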

Closest distance between two parameterized lines

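- if you have lines $p + s\,u$ and $q + t\,v$, then to minimize the distance between them you're essentially minimizing the following over $s$ and $t$:

$$\|(p + s\,u) - (q + t\,v)\| = \|(q - p) - (s\,u - t\,v)\|$$

And this is exactly the same as projecting the vector $q - p$ onto $\operatorname{span}(u, v)$: the minimum distance is $\|(q - p) - P_{\operatorname{span}(u, v)}(q - p)\|$. And we know exactly how to do this!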

- you can also find the closest distance between two parameterized (and skew) lines in $\mathbb{R}^3$ by noting that the lines lie in two parallel planes, both with normal vector $n = u \times v$. The distance between these planes is the length of the component of $q - p$ along $n$, namely $\frac{|\langle q - p, n \rangle|}{\|n\|}$ (i.e., literally "shifting" one plane along $n$ until it hits the other, and then figuring out how much you shifted)
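Both approaches can be compared numerically. This is a minimal plain-Python sketch assuming lines $p + s\,u$ and $q + t\,v$ given as lists; the point and direction names and both function names are illustrative. The first function projects $q - p$ onto $\operatorname{span}(u, v)$ (after making the spanning vectors orthogonal with one Gram-Schmidt step) and measures what is left. The second is valid only for non-parallel lines in $\mathbb{R}^3$ and uses the common normal $n = u \times v$.

```python
import math

def inner(x, y):
    return sum(a * b for a, b in zip(x, y))

def sub(x, y):
    return [a - b for a, b in zip(x, y)]

def norm(x):
    return math.sqrt(inner(x, x))

def line_distance_by_projection(p, u, q, v):
    """Distance between lines p + s*u and q + t*v in R^n (assumes u != 0).

    Projects d = q - p onto span{u, v}; the distance is the length of the
    part of d that is left over.
    """
    d = sub(q, p)
    e1 = u
    # One Gram-Schmidt step: remove the u-component of v.
    # If v is parallel to u, e2 is (numerically) zero and gets skipped below.
    e2 = sub(v, [inner(v, e1) / inner(e1, e1) * a for a in e1])
    residual = d
    for e in (e1, e2):
        ee = inner(e, e)
        if ee > 1e-12:
            c = inner(d, e) / ee
            residual = sub(residual, [c * a for a in e])
    return norm(residual)

def cross(a, b):
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]

def line_distance_by_normal(p, u, q, v):
    """Skew lines in R^3: length of the component of q - p along n = u x v."""
    n = cross(u, v)
    return abs(inner(sub(q, p), n)) / norm(n)

p, u = [1.0, 2.0, 3.0], [1.0, 1.0, 0.0]
q, v = [2.0, 0.0, 1.0], [0.0, 1.0, 1.0]
print(line_distance_by_projection(p, u, q, v))  # ~0.57735 (= 1/sqrt(3))
print(line_distance_by_normal(p, u, q, v))      # ~0.57735 (= 1/sqrt(3))
```

The projection version also handles parallel lines, where $u \times v = 0$ and the plane argument breaks down.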

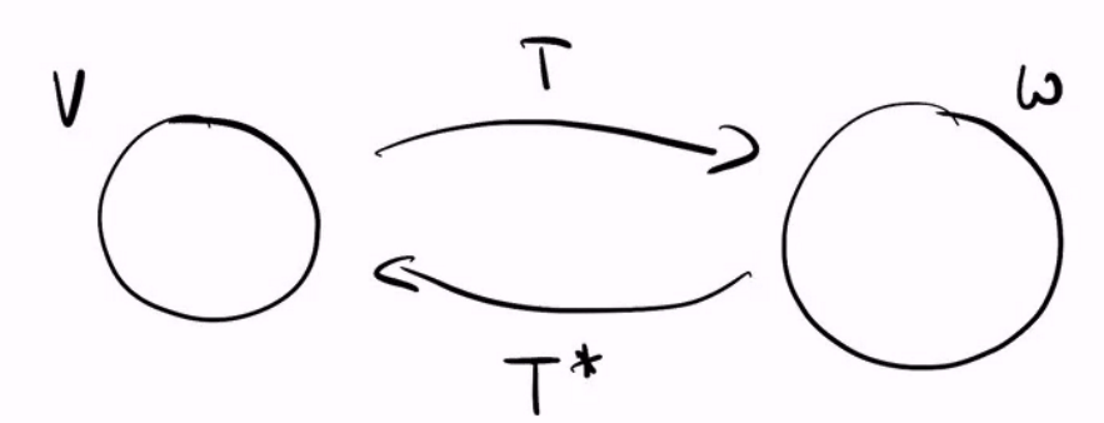

Adjoints

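$\langle Tv, w \rangle = \langle v, T^* w \rangle$ for all $v \in V$ and $w \in W$. This is the defining property of the adjoint map $T^*: W \to V$ of $T \in \mathcal{L}(V, W)$.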

Now, this should be ringing alarm bells in your head, because it looks dangerously similar to the dual map. That's because it is!

Matrix of the adjoint map

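Without getting too much in depth, the matrix of the adjoint (with respect to orthonormal bases) is the conjugate transpose of the matrix of $T$. Again, adjoint maps are literally just like the dual map. There are differences (for instance, $T'$ maps $W'$ to $V'$ and its matrix with respect to the dual bases is the plain transpose, whereas $T^*$ maps $W$ to $V$), but they're not incredibly important here.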
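A quick numerical check of $\langle Av, w \rangle = \langle v, A^* w \rangle$ for a small complex matrix in plain Python. It assumes the standard inner product on $\mathbb{C}^n$ (linear in the first slot, conjugate-linear in the second) and the standard basis, which is orthonormal; the matrix, vectors, and helper names are arbitrary.

```python
def conj_transpose(A):
    """Conjugate transpose of a matrix stored as a list of rows."""
    return [[A[i][j].conjugate() for i in range(len(A))] for j in range(len(A[0]))]

def apply(A, x):
    """Matrix-vector product."""
    return [sum(a * b for a, b in zip(row, x)) for row in A]

def inner(x, y):
    """Standard inner product on C^n: linear in x, conjugate-linear in y."""
    return sum(a * b.conjugate() for a, b in zip(x, y))

A = [[1 + 2j, 3 + 0j],
     [0 - 1j, 2 + 1j]]
A_star = conj_transpose(A)

v = [1 + 1j, 2 - 1j]
w = [0 + 3j, 1 + 0j]
lhs = inner(apply(A, v), w)       # <Av, w>
rhs = inner(v, apply(A_star, w))  # <v, A* w>
print(abs(lhs - rhs) < 1e-12)     # True
```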

Properties of the adjoint

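- $(S + T)^* = S^* + T^*$

- $(\lambda T)^* = \bar{\lambda}\, T^*$

- $(T^*)^* = T$

- $I^* = I$

- $(ST)^* = T^* S^*$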
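Each of these properties follows from the defining identity $\langle Tv, w \rangle = \langle v, T^* w \rangle$ together with uniqueness of the adjoint. As a sketch for products and scalar multiples, assume $T \in \mathcal{L}(U, V)$, $S \in \mathcal{L}(V, W)$, $u \in U$, $v \in V$, $w \in W$:

```latex
\begin{aligned}
\langle (ST)u, w \rangle &= \langle Tu, S^* w \rangle = \langle u, T^* S^* w \rangle
  &&\Longrightarrow\ (ST)^* = T^* S^*, \\
\langle (\lambda T)u, v \rangle &= \lambda \langle Tu, v \rangle = \lambda \langle u, T^* v \rangle = \langle u, \bar{\lambda}\, T^* v \rangle
  &&\Longrightarrow\ (\lambda T)^* = \bar{\lambda}\, T^* .
\end{aligned}
```

The conjugate in the second line comes from the inner product being conjugate-linear in its second slot.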

Null and range

Again, this is literally just like the dual space, so the same things hold:

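- $\operatorname{null} T^* = (\operatorname{range} T)^\perp$ (a relationship between two of the four fundamental subspaces)

- $\operatorname{range} T^* = (\operatorname{null} T)^\perp$ (the second relationship between two of the four fundamental subspaces)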
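The first relationship is a chain of equivalences straight from the definition of the adjoint. A sketch, assuming $T \in \mathcal{L}(V, W)$ with $V$ and $W$ finite-dimensional and $w \in W$:

```latex
\begin{aligned}
w \in \operatorname{null} T^*
&\iff T^* w = 0 \\
&\iff \langle v, T^* w \rangle = 0 \quad \text{for all } v \in V \\
&\iff \langle Tv, w \rangle = 0 \quad \text{for all } v \in V \\
&\iff w \in (\operatorname{range} T)^\perp .
\end{aligned}
```

The second step uses $v = T^* w$ for the backward direction. Replacing $T$ with $T^*$ (using $(T^*)^* = T$) gives $\operatorname{null} T = (\operatorname{range} T^*)^\perp$, and taking $\perp$ of both sides (using $(U^\perp)^\perp = U$ in finite dimensions) gives $\operatorname{range} T^* = (\operatorname{null} T)^\perp$.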

Self-adjoint operator

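$T = T^*$, i.e., $\langle Tv, w \rangle = \langle v, Tw \rangle$ for all $v, w \in V$. This means that the matrix of $T$ with respect to an orthonormal basis must be conjugate-symmetric!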

Every eigenvalue of a self-adjoint operator is real, and eigenvectors of a self-adjoint operator that correspond to distinct eigenvalues are orthogonal. You can prove both facts by using the inner product definition of a self-adjoint operator.
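A sketch of both arguments, assuming $T = T^*$ on an inner product space $V$ (the second line uses that $\mu$ is real, which the first line establishes):

```latex
\begin{aligned}
&Tv = \lambda v,\ v \neq 0:
&& \lambda\|v\|^2 = \langle Tv, v \rangle = \langle v, Tv \rangle = \bar{\lambda}\|v\|^2
   \ \Rightarrow\ \lambda = \bar{\lambda} \in \mathbb{R}, \\
&Tv = \lambda v,\ Tw = \mu w,\ \lambda \neq \mu:
&& \lambda\langle v, w \rangle = \langle Tv, w \rangle = \langle v, Tw \rangle = \mu\langle v, w \rangle
   \ \Rightarrow\ \langle v, w \rangle = 0 .
\end{aligned}
```

In the second line, $\langle v, \mu w \rangle = \bar{\mu}\langle v, w \rangle = \mu\langle v, w \rangle$, and $(\lambda - \mu)\langle v, w \rangle = 0$ with $\lambda \neq \mu$ forces $\langle v, w \rangle = 0$.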

Normal operators

A normal operator is defined as one that commutes with its adjoint: $TT^* = T^*T$. Obviously, every self-adjoint operator is normal.

In general, $T$ is normal if and only if $\|Tv\| = \|T^*v\|$ for all $v \in V$. You can show this by using the inner product definition of a norm.

- as a consequence, if $T$ is normal, then $T$ and $T^*$ have the same eigenvectors, with eigenvalues that are conjugates of each other: if $Tv = \lambda v$, then $T^* v = \bar{\lambda} v$.
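Both facts come from short inner-product computations. A sketch of the standard argument, for $T \in \mathcal{L}(V)$ on a finite-dimensional inner product space:

```latex
\begin{aligned}
&\|Tv\|^2 - \|T^*v\|^2 = \langle T^*Tv, v \rangle - \langle TT^*v, v \rangle = \langle (T^*T - TT^*)v, v \rangle, \\
&\|(T - \lambda I)v\| = \|(T - \lambda I)^* v\| = \|(T^* - \bar{\lambda} I)v\| \quad (T \text{ normal} \Rightarrow T - \lambda I \text{ normal}).
\end{aligned}
```

If $T$ is normal, the right side of the first line is $0$ for every $v$. Conversely, $T^*T - TT^*$ is self-adjoint, and a self-adjoint operator $S$ with $\langle Sv, v \rangle = 0$ for all $v$ must be $0$. The second line applies the norm equality to $T - \lambda I$, so $Tv = \lambda v$ exactly when $T^* v = \bar{\lambda} v$.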