Inner Product & Norms


Riesz representation theorem

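Every linear functional $\varphi$ on a finite-dimensional inner product space $V$ can be represented as an inner product: there is a unique $u \in V$ such that $\varphi(v) = \langle v, u \rangle$ for every $v \in V$. This is actually how we got to the idea of a linear functional, but formally, the linear functional comes before the inner product.

In that sense, inner products and dual spaces are tied together: every element of the dual space is "take the inner product with some fixed vector $u$".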
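Here is a minimal sketch of the Riesz representation in $\mathbb{R}^n$ with the standard dot product. It assumes the functional is given as a Python function; `phi`, `riesz_vector` and `dot` are hypothetical names. The representing vector comes from evaluating the functional on the standard basis, $u_i = \varphi(e_i)$. Over $\mathbb{C}$ you would conjugate instead: $u_i = \overline{\varphi(e_i)}$.

```python
def dot(v, u):
    """Standard real dot product <v, u>."""
    return sum(vi * ui for vi, ui in zip(v, u))

def riesz_vector(phi, n):
    """Return u with phi(v) == dot(v, u) for all v in R^n (real case only)."""
    basis = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    # A linear functional is pinned down by its values on a basis: u_i = phi(e_i)
    return [phi(e) for e in basis]

# A hypothetical linear functional on R^3
def phi(v):
    return 2 * v[0] - v[1] + 0.5 * v[2]

u = riesz_vector(phi, 3)
print(u)                  # [2.0, -1.0, 0.5]
v = [1.0, 4.0, -2.0]
print(phi(v), dot(v, u))  # -3.0 -3.0
```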

Inner product spaces

An inner product can technically be anything, as long as it satisfies five properties. The dot product is one example.

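- positivity: $\langle v, v \rangle \ge 0$ for all $v \in V$

- definiteness: $\langle v, v \rangle = 0$ if and only if $v = 0$

- additivity in the first slot: $\langle u + v, w \rangle = \langle u, w \rangle + \langle v, w \rangle$

  - works for the second position too: $\langle u, v + w \rangle = \langle u, v \rangle + \langle u, w \rangle$

- homogeneity in the first slot: $\langle \lambda u, v \rangle = \lambda \langle u, v \rangle$

  - in the second slot: $\langle u, \lambda v \rangle = \bar{\lambda} \langle u, v \rangle$ (note the bar)

- conjugate symmetry: $\langle u, v \rangle = \overline{\langle v, u \rangle}$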

Aside: Dot product with complex numbers

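- You want $\langle z, z \rangle = \|z\|^2$ to be a non-negative real number. You also know that $|z_j|^2 = z_j \bar{z}_j$ for a complex number $z_j$. So it naturally follows that $\langle w, z \rangle = w_1 \bar{z}_1 + \dots + w_n \bar{z}_n$.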
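This is a small check of the complex dot product. It assumes the convention $\langle w, z \rangle = \sum_j w_j \bar{z}_j$, which is linear in the first slot; `cdot` is a hypothetical helper name. It confirms three things:

- $\langle z, z \rangle$ comes out real and non-negative.
- Conjugate symmetry holds.
- A scalar leaving the second slot picks up a bar.

```python
import cmath

def cdot(w, z):
    """Complex inner product <w, z> = sum_j w_j * conj(z_j), linear in the first slot."""
    return sum(wj * zj.conjugate() for wj, zj in zip(w, z))

w = [1 + 2j, 3 - 1j]
z = [2 - 1j, 1j]
lam = 2 + 3j

# <z, z> = sum_j |z_j|^2, so it is real and non-negative
print(cdot(z, z))  # 6, with zero imaginary part
# Conjugate symmetry: <w, z> = conj(<z, w>)
print(cmath.isclose(cdot(w, z), cdot(z, w).conjugate()))  # True
# A scalar pulled out of the second slot picks up a bar
print(cmath.isclose(cdot(w, [lam * zj for zj in z]), lam.conjugate() * cdot(w, z)))  # True
```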

Aside: Dot products with functions

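On real-valued continuous functions on $[a, b]$, you can define $\langle f, g \rangle = \int_a^b f(x) g(x)\,dx$, and it satisfies the properties above. This also works with any positive weight function $w$: $\langle f, g \rangle = \int_a^b f(x) g(x) w(x)\,dx$ is still an inner product. The weight has to be positive so that positivity and definiteness survive. This is not just a toy example; this sort of inner product is used in Fourier transforms!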

You can take the norm of a function, $\|f\| = \sqrt{\langle f, f \rangle}$! We can also say that two functions are "orthogonal" when $\langle f, g \rangle = 0$.
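This sketch approximates $\langle f, g \rangle = \int_a^b f(x) g(x)\,dx$ with the midpoint rule. The quadrature choice and `n = 10_000` are assumptions made for illustration, not part of the definition. It checks that $\sin$ and $\cos$ are orthogonal on $[-\pi, \pi]$, and that $\|\sin\|^2 = \pi$ there.

```python
import math

def inner(f, g, a, b, n=10_000):
    """Midpoint-rule approximation of <f, g> = integral from a to b of f(x) g(x) dx."""
    h = (b - a) / n
    return h * sum(f(a + (k + 0.5) * h) * g(a + (k + 0.5) * h) for k in range(n))

def norm(f, a, b):
    """Induced norm ||f|| = sqrt(<f, f>)."""
    return math.sqrt(inner(f, f, a, b))

a, b = -math.pi, math.pi
print(inner(math.sin, math.cos, a, b))  # close to 0: sin and cos are orthogonal here
print(norm(math.sin, a, b) ** 2)        # close to pi
```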

Derivable properties (and identities)

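- For each fixed $u$, the map $v \mapsto \langle v, u \rangle$ is a linear functional.

- $\langle 0, u \rangle = 0$

- $\langle u, 0 \rangle = 0$

- This is because $\langle 0, u \rangle = \langle 0 + 0, u \rangle = \langle 0, u \rangle + \langle 0, u \rangle$. Conjugate symmetry then gives $\langle u, 0 \rangle = \overline{\langle 0, u \rangle} = 0$.

- $\langle u, v + w \rangle = \langle u, v \rangle + \langle u, w \rangle$

- $\langle u, \lambda v \rangle = \bar{\lambda} \langle u, v \rangle$.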

Inner products of matrices

The corresponding norm of a matrix is the Frobenius norm. So the inner product of matrices is naturally defined as $\langle A, B \rangle_F = \operatorname{tr}(A^T B) = \sum_{i,j} A_{ij} B_{ij}$ (for real matrices).
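This is a quick pure-Python check (no NumPy, so helper names like `matmul` are hypothetical). It shows that the trace form $\operatorname{tr}(A^T B)$ and the element-wise sum $\sum_{i,j} A_{ij} B_{ij}$ agree for real matrices, using a non-square pair on purpose.

```python
def transpose(A):
    return [list(col) for col in zip(*A)]

def matmul(A, B):
    """Plain triple-loop product of list-of-rows matrices."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]

def trace(M):
    return sum(M[i][i] for i in range(len(M)))

def frob_inner_trace(A, B):
    """<A, B>_F = tr(A^T B) for real matrices of the same shape."""
    return trace(matmul(transpose(A), B))

def frob_inner_elementwise(A, B):
    """<A, B>_F = sum over i, j of A_ij * B_ij."""
    return sum(a * b for row_a, row_b in zip(A, B) for a, b in zip(row_a, row_b))

A = [[1, 2, 3], [4, 5, 6]]  # 2x3, non-square on purpose
B = [[0, -1, 2], [1, 1, -3]]
print(frob_inner_trace(A, B), frob_inner_elementwise(A, B))  # -5 -5
```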

Norms

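The norm of a vector is defined as $\|v\| = \sqrt{\langle v, v \rangle}$. Two vectors are orthogonal if $\langle u, v \rangle = 0$. So every inner product space comes with a norm. The converse is false: some norms, such as the $p$-norms with $p \ne 2$, do not come from any inner product. Norms satisfy these properties: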

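- non-negativity: $\|v\| \ge 0$

- definiteness: $\|v\| = 0$ if and only if $v = 0$

- homogeneity: $\|\lambda v\| = |\lambda| \, \|v\|$

- triangle inequality: $\|u + v\| \le \|u\| + \|v\|$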

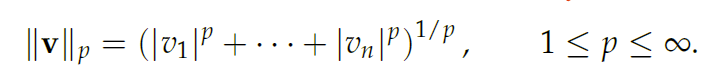

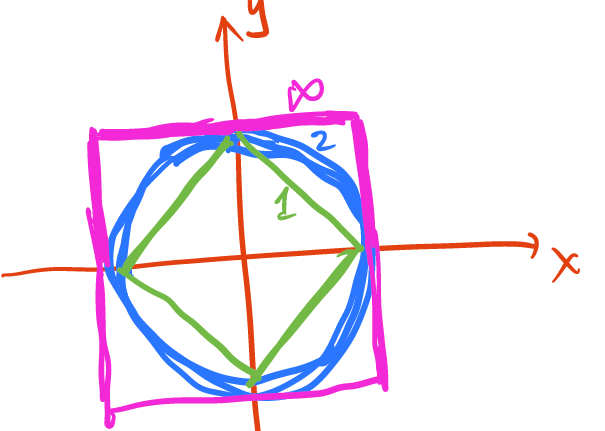

Holder norm (p-norm)

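For $p \ge 1$, the $p$-norm of $x$ is $\|x\|_p = \left( \sum_i |x_i|^p \right)^{1/p}$.

- If $p = 2$, you have your standard Euclidean norm

- If $p = 1$, then you just have the sum of the absolute values of the components, known as the Manhattan norm

- If $p \to \infty$, then you have $\|x\|_\infty = \max_i |x_i|$ (known as the infinity norm; it selects the largest absolute value)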
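Here is a minimal sketch of the p-norm. It assumes the usual definition $\|x\|_p = (\sum_i |x_i|^p)^{1/p}$ for $p \ge 1$ and uses `math.inf` for the max-abs case; `p_norm` is a hypothetical name. The loop at the end shows $\|x\|_p$ approaching the infinity norm as $p$ grows.

```python
import math

def p_norm(x, p):
    """||x||_p = (sum |x_i|^p)^(1/p) for p >= 1; p = math.inf gives max |x_i|."""
    if p == math.inf:
        return max(abs(xi) for xi in x)
    if p < 1:
        raise ValueError("p must be >= 1 for this to be a norm")
    return sum(abs(xi) ** p for xi in x) ** (1 / p)

x = [3, -4, 1]
print(p_norm(x, 1))         # 8.0 (Manhattan norm)
print(p_norm(x, 2))         # about 5.099 = sqrt(26) (Euclidean norm)
print(p_norm(x, math.inf))  # 4 (infinity norm)
for p in (4, 16, 64):
    print(p, p_norm(x, p))  # shrinks toward 4 as p grows
```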

Frobenius Norm (matrix)

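The Frobenius norm is defined in terms of the Frobenius inner product, i.e. $\langle A, B \rangle_F = \operatorname{tr}(A^T B)$. The Frobenius norm is just $\|A\|_F = \sqrt{\operatorname{tr}(A^T A)}$. By element, it is also $\|A\|_F = \sqrt{\sum_{i,j} A_{ij}^2}$. This also means that $\|A\|_F$ is the Euclidean norm of $A$ flattened into one long vector.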

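The Frobenius norm satisfies all the properties of a norm.

- Special property: if $Q$ is a rigid transformation (an orthogonal matrix, $Q^T Q = I$), then $\|QA\|_F = \|A\|_F$. Proof: $\|QA\|_F^2 = \operatorname{tr}(A^T Q^T Q A) = \operatorname{tr}(A^T A) = \|A\|_F^2$. The same holds for $\|AQ\|_F$, using the cyclic property of the trace.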

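- From this, we derive that the norm of a rank-$r$ matrix $A = U \Sigma V^T$ can be reduced to the norm of the $r \times r$ diagonal matrix $\Sigma$ of its nonzero singular values. You can even reduce it to the vector of those singular values if you desire: $\|A\|_F = \sqrt{\sigma_1^2 + \dots + \sigma_r^2}$.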

- The Frobenius norm of a non-square matrix is still defined because $A^T A$ is square, so its trace exists.

- Like other norms, .

Moral of the story: if you want matrix-level properties, use the trace definition. If you want element-level properties, use the direct sum-of-squares definition.
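This sketch checks two Frobenius facts on a small example: rotation invariance $\|QA\|_F = \|A\|_F$, and $\|A\|_F^2 = \sum_i \sigma_i^2$. It makes two assumptions:

- $Q$ is a $2 \times 2$ rotation.
- The squared singular values of the $2 \times 3$ matrix $A$ come from the eigenvalues of $A A^T$ via the quadratic formula, a shortcut that only works for this small case.

```python
import math

def matmul(A, B):
    """Plain triple-loop product of list-of-rows matrices."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]

def frob_norm(A):
    """Element-level definition: square root of the sum of squared entries."""
    return math.sqrt(sum(a * a for row in A for a in row))

theta = 0.7  # arbitrary rotation angle
Q = [[math.cos(theta), -math.sin(theta)],
     [math.sin(theta),  math.cos(theta)]]  # 2x2 rotation, so Q^T Q = I
A = [[1.0, 2.0, 3.0],
     [4.0, 5.0, 6.0]]  # non-square 2x3 matrix

print(frob_norm(A))             # sqrt(91), about 9.539
print(frob_norm(matmul(Q, A)))  # same value up to rounding

# Squared singular values of A are the eigenvalues of the 2x2 matrix G = A A^T,
# obtained here from the trace and determinant of G via the quadratic formula.
G = matmul(A, [list(col) for col in zip(*A)])
tr = G[0][0] + G[1][1]
det = G[0][0] * G[1][1] - G[0][1] * G[1][0]
disc = math.sqrt(tr * tr - 4 * det)
sigma_sq = [(tr + disc) / 2, (tr - disc) / 2]
print(math.sqrt(sum(sigma_sq)))  # sqrt(sigma_1^2 + sigma_2^2) matches ||A||_F
```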

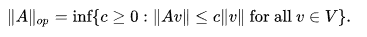

Operator Norm (matrix)

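We define $\|A\|_{op} = \sup_{x \ne 0} \frac{\|Ax\|}{\|x\|} = \max_{\|x\| = 1} \|Ax\|$ as the operator norm. The operator norm guarantees that $\|Ax\| \le \|A\|_{op} \, \|x\|$ for every $x$.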

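With the Euclidean norm, the operator norm is the largest singular value of the matrix, $\sigma_{\max}(A) = \sqrt{\lambda_{\max}(A^T A)}$. It equals the largest absolute eigenvalue only in special cases, such as symmetric matrices.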
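This sketch shows that the Euclidean operator norm is the largest singular value, not the largest eigenvalue. It uses power iteration on $A^T A$ as the method, which is a standard choice but not the only one. The upper-triangular `A` has both eigenvalues equal to 1, yet it stretches some unit vectors by $1 + \sqrt{2}$. For the symmetric `S` the two notions coincide.

```python
import math

def matvec(A, x):
    """Matrix-vector product for a list-of-rows matrix."""
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]

def transpose(A):
    return [list(col) for col in zip(*A)]

def l2(x):
    return math.sqrt(sum(xi * xi for xi in x))

def operator_norm_2(A, iters=200):
    """Estimate max_{||x||_2 = 1} ||Ax||_2, the largest singular value of A.

    Power iteration on A^T A: assumes the top singular value is strictly larger
    than the next one and that the all-ones start vector is not orthogonal to
    the top right-singular vector.
    """
    At = transpose(A)
    x = [1.0] * len(A[0])
    for _ in range(iters):
        y = matvec(At, matvec(A, x))
        n = l2(y)
        x = [yi / n for yi in y]
    return l2(matvec(A, x))

A = [[1.0, 2.0],
     [0.0, 1.0]]  # upper triangular: both eigenvalues are 1
S = [[2.0, 1.0],
     [1.0, 2.0]]  # symmetric: eigenvalues 3 and 1

print(operator_norm_2(A))  # about 2.41421 = 1 + sqrt(2), not 1
print(operator_norm_2(S))  # about 3.0, the largest |eigenvalue|
```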

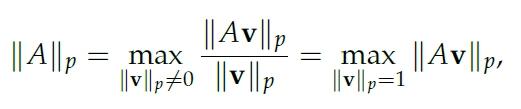

Matrix p-norms (matrix)

Essentially, $\|A\|_p = \max_{\|x\|_p = 1} \|Ax\|_p$: find the vector that grows the longest under this transformation, using the $p$-norm (see the Holder norm section) as the metric.
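This is a brute-force sketch for $p = 1$ and $p = \infty$, assuming the definition $\|A\|_p = \max_{\|x\|_p = 1} \|Ax\|_p$. Since $\|Ax\|_p$ is convex in $x$, its maximum over these polytope-shaped unit balls sits at a vertex, so only vertices are tested. It recovers the known closed forms:

- largest absolute column sum for $p = 1$
- largest absolute row sum for $p = \infty$

```python
import math
from itertools import product

def matvec(A, x):
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]

def induced_norm_by_vertices(A, p):
    """max ||Ax||_p over the unit p-ball, for p = 1 or p = math.inf.

    x -> ||Ax||_p is convex, so its maximum over the (polytope) unit ball is
    reached at a vertex: +-e_j for the l1 ball, sign vectors for the l_inf ball.
    """
    n = len(A[0])
    if p == 1:
        vertices = [[s if i == j else 0 for i in range(n)] for j in range(n) for s in (1, -1)]
        return max(sum(abs(t) for t in matvec(A, v)) for v in vertices)
    if p == math.inf:
        vertices = [list(signs) for signs in product((1, -1), repeat=n)]
        return max(max(abs(t) for t in matvec(A, v)) for v in vertices)
    raise ValueError("this sketch only handles p = 1 and p = math.inf")

A = [[1, -2, 3],
     [-4, 5, 0]]
max_col_sum = max(sum(abs(row[j]) for row in A) for j in range(len(A[0])))
max_row_sum = max(sum(abs(a) for a in row) for row in A)

print(induced_norm_by_vertices(A, 1), max_col_sum)         # 7 7
print(induced_norm_by_vertices(A, math.inf), max_row_sum)  # 9 9
```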

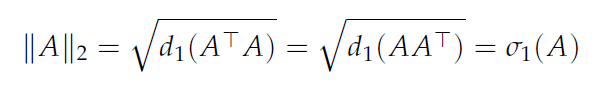

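Spectral Norm of Matrix

The spectral norm is the matrix $p$-norm with $p = 2$: $\|A\|_2 = \max_{\|x\|_2 = 1} \|Ax\|_2 = \sigma_{\max}(A)$, the largest singular value of $A$.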

Derivable Norm / Inner Product Properties

Pythagorean theorem

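$\|u + v\|^2 = \|u\|^2 + \|v\|^2$ for any $u, v$ that are orthogonal. The proof is just an expansion of the norm into inner products: $\|u + v\|^2 = \langle u + v, u + v \rangle = \|u\|^2 + \langle u, v \rangle + \langle v, u \rangle + \|v\|^2$. The two cross terms vanish by orthogonality.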

Cauchy Schwarz

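$$|\langle u, v \rangle| \le \|u\| \, \|v\|$$

This has a ton of implications, because it generalizes to all inner products, including integrals: $\left| \int_a^b f(x) g(x)\,dx \right| \le \sqrt{\int_a^b f(x)^2\,dx} \, \sqrt{\int_a^b g(x)^2\,dx}$.

The proof (for $v \ne 0$) decomposes $u = \frac{\langle u, v \rangle}{\|v\|^2} v + w$, with $w$ orthogonal to $v$. Then it expands $\|u\|^2$ in these new terms and applies the Pythagorean theorem: $\|u\|^2 = \frac{|\langle u, v \rangle|^2}{\|v\|^2} + \|w\|^2 \ge \frac{|\langle u, v \rangle|^2}{\|v\|^2}$.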
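This is a numerical sketch of the proof idea for real vectors with the dot product. It splits $u = cv + w$ with $c = \langle u, v \rangle / \|v\|^2$ and confirms $w \perp v$. It then compares both sides of Cauchy-Schwarz and of the Pythagorean step. The vectors are arbitrary examples, and `split_along` is a hypothetical helper name.

```python
import math

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def norm(v):
    return math.sqrt(dot(v, v))

def split_along(u, v):
    """Write u = c*v + w with w orthogonal to v (v must be nonzero)."""
    c = dot(u, v) / dot(v, v)
    w = [ui - c * vi for ui, vi in zip(u, v)]
    return c, w

u = [1.0, 2.0, 3.0]
v = [4.0, -1.0, 2.0]
c, w = split_along(u, v)

print(dot(w, v))                          # about 0: w is orthogonal to v
print(abs(dot(u, v)), norm(u) * norm(v))  # 8.0 vs about 17.15, so |<u, v>| <= ||u|| ||v||
# Pythagorean step: ||u||^2 = |<u, v>|^2 / ||v||^2 + ||w||^2
print(norm(u) ** 2, dot(u, v) ** 2 / dot(v, v) + norm(w) ** 2)
```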

Holder Inequality

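The Cauchy-Schwarz inequality (for the standard dot product) is actually the special case $p = q = 2$ of the Holder inequality. The Holder inequality applies as follows (where the subscripts are Holder norms):

$$|\langle x, y \rangle| \le \|x\|_p \, \|y\|_q \quad \text{whenever } \frac{1}{p} + \frac{1}{q} = 1$$

Here $q = \infty$ when $p = 1$.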
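This is a randomized sanity check, not a proof, of $|\langle x, y \rangle| \le \|x\|_p \|y\|_q$ for a few exponent pairs with $1/p + 1/q = 1$. The seed, dimension, and tolerance are arbitrary choices, and the helper names are hypothetical.

```python
import math
import random

def dot(x, y):
    return sum(a * b for a, b in zip(x, y))

def p_norm(x, p):
    """||x||_p for p >= 1; p = math.inf gives max |x_i|."""
    if p == math.inf:
        return max(abs(t) for t in x)
    return sum(abs(t) ** p for t in x) ** (1 / p)

def conjugate_exponent(p):
    """The q with 1/p + 1/q = 1 (p = 1 pairs with q = inf, and vice versa)."""
    if p == 1:
        return math.inf
    if p == math.inf:
        return 1.0
    return p / (p - 1)

random.seed(0)
for p in (1, 1.5, 2, 3):
    q = conjugate_exponent(p)
    for _ in range(1000):
        x = [random.uniform(-1, 1) for _ in range(5)]
        y = [random.uniform(-1, 1) for _ in range(5)]
        # small tolerance absorbs floating-point rounding near equality
        assert abs(dot(x, y)) <= p_norm(x, p) * p_norm(y, q) + 1e-12
print("Holder's inequality held in every trial")
```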

Triangle inequality

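$\|u + v\| \le \|u\| + \|v\|$. You can prove this by expanding the norm into inner products and applying the Cauchy-Schwarz inequality: $\|u + v\|^2 = \|u\|^2 + 2\operatorname{Re}\langle u, v \rangle + \|v\|^2 \le \|u\|^2 + 2\|u\| \, \|v\| + \|v\|^2 = (\|u\| + \|v\|)^2$.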

Parallelogram Equality

$$\|u + v\|^2 + \|u - v\|^2 = 2\left(\|u\|^2 + \|v\|^2\right)$$

The proof is just expanding both squared norms into inner products; the cross terms cancel.
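This sketch shows the parallelogram equality holding for $\ell_2$ and failing for $\ell_1$ on $u = e_1$, $v = e_2$. A norm satisfies this identity exactly when it comes from an inner product (the Jordan-von Neumann theorem). So the nonzero gap for $\ell_1$ shows that not every norm is induced by an inner product. `parallelogram_gap` is a hypothetical helper name.

```python
def p_norm(x, p):
    return sum(abs(t) ** p for t in x) ** (1 / p)

def parallelogram_gap(u, v, p):
    """||u+v||^2 + ||u-v||^2 - 2(||u||^2 + ||v||^2) in the p-norm."""
    s = [a + b for a, b in zip(u, v)]
    d = [a - b for a, b in zip(u, v)]
    return p_norm(s, p) ** 2 + p_norm(d, p) ** 2 - 2 * (p_norm(u, p) ** 2 + p_norm(v, p) ** 2)

u, v = [1.0, 0.0], [0.0, 1.0]
print(parallelogram_gap(u, v, 2))  # about 0 (rounding only): l2 comes from the dot product
print(parallelogram_gap(u, v, 1))  # 4.0: the l1 norm violates the identity
```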

Dual Norm

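The dual norm to some norm $\|\cdot\|$ is defined as

$$\|y\|_* = \sup_{\|x\| \le 1} \langle x, y \rangle$$

Intuitively, we walk along the boundary of the unit ball of the norm and see how large the inner product with $y$ can get.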

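- Dual of $\ell_2$ is $\ell_2$ (this should be obvious: by Cauchy-Schwarz, the best $x$ is $y / \|y\|_2$)

- Dual of $\ell_1$ is the infinity norm (intuitively: put all the weight on the coordinate of $y$ with the largest absolute value)

This dual norm property is a result of Holder’s inequality. More generally, the dual of $\ell_p$ is $\ell_q$ with $\frac{1}{p} + \frac{1}{q} = 1$.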
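This is a brute-force sketch of the dual norm $\|y\|_* = \sup_{\|x\| \le 1} \langle x, y \rangle$ for $\ell_1$ and $\ell_\infty$. A linear function peaks at a vertex of these unit balls, so checking vertices is enough. It confirms that the dual of $\ell_1$ is $\ell_\infty$, and as a bonus that the dual of $\ell_\infty$ is $\ell_1$. The function names are hypothetical.

```python
from itertools import product

def dot(x, y):
    return sum(a * b for a, b in zip(x, y))

def dual_of_l1(y):
    """sup of <x, y> over the l1 unit ball; a linear function peaks at a vertex +-e_i."""
    n = len(y)
    vertices = [[s if i == j else 0 for i in range(n)] for j in range(n) for s in (1, -1)]
    return max(dot(x, y) for x in vertices)

def dual_of_linf(y):
    """sup of <x, y> over the l_inf unit ball; its vertices are the sign vectors in {-1, 1}^n."""
    return max(dot(x, y) for x in product((1, -1), repeat=len(y)))

y = [3, -7, 2]
print(dual_of_l1(y), max(abs(t) for t in y))    # 7 7   (dual of l1 is l_inf)
print(dual_of_linf(y), sum(abs(t) for t in y))  # 12 12 (dual of l_inf is l1)
```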