Fano’s and Data Processing Inequality

| Tags | BasicsEE 276 |

|---|

Fano’s Inequality

Suppose that you knew a sample from and you wanted to make an inference about , a random variable correlated with . Can we say something about the fundamental limits of prediction?

The intuition

We can only estimate with perfect accuracy if , i.e. observing a removes all entropy from . So intuitively, the limits have to do with this conditional entropy.

The Theorem 🐧

Consider a markov chain .

Let’s let . We claim that

which can be weakened (by letting H(Pe) = 1) into

Proof (using an additional indicator RV and using some neat properties)

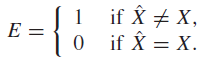

Let be an indicator function, i.e. a bernoulli with probability .

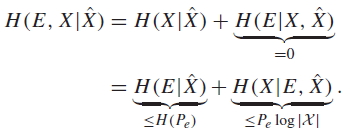

And let’s consider the quantity . We can expand it two ways

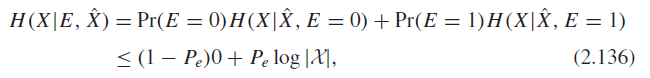

The first zero is true because E is a function of the two X’s. The first inequality is true just due to entropy rules, and the second one is true using the following expansion

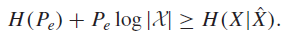

The first component is zero because if E = 0, then X = X, and nothing is uncertain. Putting this together, we have

And we can use the data processing inequality to derive that , and so we are done.

Data Processing Inequality

A markov chain happens when we have , where each variable is indepdent of all previous variables conditioned on the immediately previous one (markov assumption)

Remember that implies that , as they are all active paths.

The inequality 🐧

The inequality states that if , then . In other words, you can’t gain information along the markov chain.

Proof (MI chain rule)

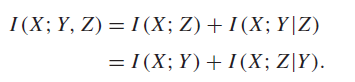

By the chain rule we get

and by the Markov assumption we know that , and MI is non-negative, so as desired. It is also obvious why the equality condition holds here.

Corollary 1: Equality happens if .

Corollary 2: . This means that looking downstream will “spoil” the information upstream, which makes sense.

Now, due to the symmetry of the Markov chain, we also have , because we can just use the factor , and the invariance of order in mutual information. In this case, the path is shorter than , so the mutual information is larger.