Divergences

| Tags | Basics |
|---|---|

Fantastic Divergences and Where to Find Them

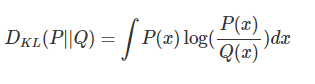

KL

- relative entropy

- not a metric because it is not symmetric and fails triangle inequality

- $P$ is the true data distribution and $Q$ is the predicted one. The support of $Q$ must contain the support of $P$ for this to work; otherwise the divergence is infinite.
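To make the asymmetry and the support condition concrete, here is a minimal sketch for discrete distributions, assuming the usual definition $D_{KL}(P \| Q) = \sum_x P(x) \log \frac{P(x)}{Q(x)}$ with natural logs. The helper name `kl_divergence` and the example vectors are made up for illustration.

```python
import numpy as np

def kl_divergence(p, q):
    """KL(P || Q) in nats for discrete probability vectors (illustrative helper)."""
    p = np.asarray(p, dtype=float)
    q = np.asarray(q, dtype=float)
    mask = p > 0  # terms with P(x) = 0 contribute 0 by convention
    if np.any(q[mask] == 0):
        return np.inf  # P has mass where Q has none: support condition violated
    return float(np.sum(p[mask] * np.log(p[mask] / q[mask])))

# Assumed toy distributions: Q covers the support of P, but not the reverse.
p = np.array([0.5, 0.5, 0.0])
q = np.array([0.25, 0.25, 0.5])

print(kl_divergence(p, q))  # finite, since supp(P) is inside supp(Q)
print(kl_divergence(q, p))  # infinite, since Q has mass where P has none
```

Swapping the arguments changes the answer, which is the lack of symmetry noted above.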

Cross Entropy

Cross entropy is just entropy, except the log term is evaluated under the predicted distribution $Q$. Or, you can understand it as KL divergence except there is no $P(x)$ in the numerator:

$$H(P, Q) = -\sum_x P(x) \log Q(x)$$

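You can interpret KL as cross entropy that regularizes for $P$'s entropy: $D_{KL}(P \| Q) = H(P, Q) - H(P)$. This is important if you're fitting $P$, or else you will create a very narrow distribution, because minimizing cross entropy alone pushes $P$ to collapse onto the mode of $Q$.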
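A quick numerical check of that decomposition, as a sketch for discrete distributions with natural logs. The function names and the example vectors are assumptions for illustration, and $Q$ is assumed positive wherever $P$ is.

```python
import numpy as np

def entropy(p):
    """H(P) = -sum_x P(x) log P(x), in nats."""
    p = np.asarray(p, dtype=float)
    mask = p > 0
    return float(-np.sum(p[mask] * np.log(p[mask])))

def cross_entropy(p, q):
    """H(P, Q) = -sum_x P(x) log Q(x), in nats; assumes Q > 0 wherever P > 0."""
    p = np.asarray(p, dtype=float)
    q = np.asarray(q, dtype=float)
    mask = p > 0
    return float(-np.sum(p[mask] * np.log(q[mask])))

# Assumed toy distributions.
p = np.array([0.7, 0.2, 0.1])
q = np.array([0.5, 0.3, 0.2])

kl_from_parts = cross_entropy(p, q) - entropy(p)   # H(P, Q) - H(P)
kl_direct = float(np.sum(p * np.log(p / q)))       # sum_x P(x) log(P(x) / Q(x))
print(kl_from_parts, kl_direct)  # the two values should agree up to rounding
```

When $P$ is fixed (the usual supervised setting), $H(P)$ is a constant, so minimizing cross entropy over $Q$ and minimizing KL over $Q$ give the same answer.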

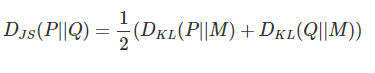

Jensen-Shannon

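This is just

$$\mathrm{JS}(P \| Q) = \frac{1}{2} D_{KL}(P \| M) + \frac{1}{2} D_{KL}(Q \| M)$$

where $M$ is the mixture $M = \frac{1}{2}(P + Q)$.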

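Unlike KL, JS divergence is symmetric and bounded (by $\log 2$ with natural logs). The divergence itself is not a metric, but its square root, the Jensen-Shannon distance, is a true metric.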
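The sketch below computes JS divergence for discrete distributions as the average of two KL terms against the mixture $M = \frac{1}{2}(P + Q)$, using natural logs. The helper names and inputs are illustrative assumptions.

```python
import numpy as np

def kl(p, q):
    """KL(P || Q) in nats; assumes Q > 0 wherever P > 0."""
    mask = p > 0
    return float(np.sum(p[mask] * np.log(p[mask] / q[mask])))

def js_divergence(p, q):
    """Jensen-Shannon divergence: average KL of P and Q to their mixture M."""
    p = np.asarray(p, dtype=float)
    q = np.asarray(q, dtype=float)
    m = 0.5 * (p + q)  # M is positive wherever P or Q is, so both KL terms are finite
    return 0.5 * kl(p, m) + 0.5 * kl(q, m)

# Assumed extreme case: disjoint supports, where KL would be infinite.
p = np.array([1.0, 0.0])
q = np.array([0.0, 1.0])

js_pq = js_divergence(p, q)
js_qp = js_divergence(q, p)
print(js_pq, js_qp, np.log(2))  # symmetric, and equal to the upper bound log 2 here
print(np.sqrt(js_pq))           # Jensen-Shannon distance (the metric)
```

Because the mixture covers both supports, JS stays finite even when the two distributions do not overlap at all.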

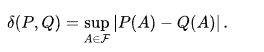

TV Divergence (Total Variation Distance)

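This is basically “how much can the density differ?”. For densities $p$ and $q$ it equals $\frac{1}{2}\int |p(x) - q(x)|\,dx$, which is also the largest gap in probability the two distributions can assign to any single event. Note that the pointwise gap between densities doesn’t have to be bounded (consider a density approaching a Dirac delta, for example), but TV itself always lies in $[0, 1]$.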

TV and KL are related through Pinsker's inequality: $\mathrm{TV}(P, Q) \le \sqrt{\frac{1}{2} D_{KL}(P \| Q)}$, with KL measured in nats.
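As a sanity check, the sketch below computes TV for two discrete distributions as half the L1 distance and compares it with the Pinsker bound $\sqrt{D_{KL}/2}$ (natural logs). The helper names, the random seed, and the Dirichlet-sampled distributions are arbitrary choices for illustration.

```python
import numpy as np

def tv_distance(p, q):
    """Total variation distance for discrete distributions: half the L1 distance."""
    return 0.5 * float(np.sum(np.abs(p - q)))

def kl_divergence(p, q):
    """KL(P || Q) in nats; assumes Q > 0 wherever P > 0."""
    mask = p > 0
    return float(np.sum(p[mask] * np.log(p[mask] / q[mask])))

# Assumed example: two random distributions over 5 outcomes.
rng = np.random.default_rng(0)
p = rng.dirichlet(np.ones(5))
q = rng.dirichlet(np.ones(5))

tv = tv_distance(p, q)
bound = float(np.sqrt(0.5 * kl_divergence(p, q)))  # Pinsker upper bound on TV
print(tv, bound)  # tv should not exceed bound, and tv stays within [0, 1]
```

The bound is only informative when KL is small: once $D_{KL} \ge 2$ nats, the right-hand side exceeds 1, which TV can never reach past anyway.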