Math refresher

| Tags | CS 236 |

|---|

Advice

- The form isn’t necessarily KL divergence, although it takes a similar form

- For more complicated proofs, it helps to work backwards from the more complicated answer

- write out the expectation as a summation

Math review, proof tips

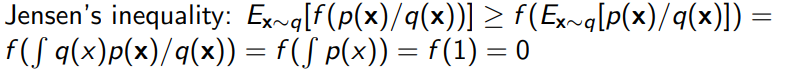

Jensen’s inequality: , opposite way around for convex functions. Useful because usually putting the log inside can create a simpler expression. This is your friend.

Mapping between distributions: , where is potentially a simpler function, is a mapping from simple to complicated, and is the complicated distribution

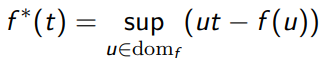

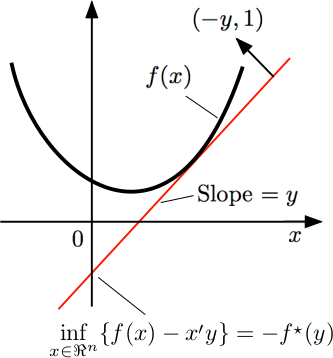

Fenchel conjugate: the convex conjugate of a function: .

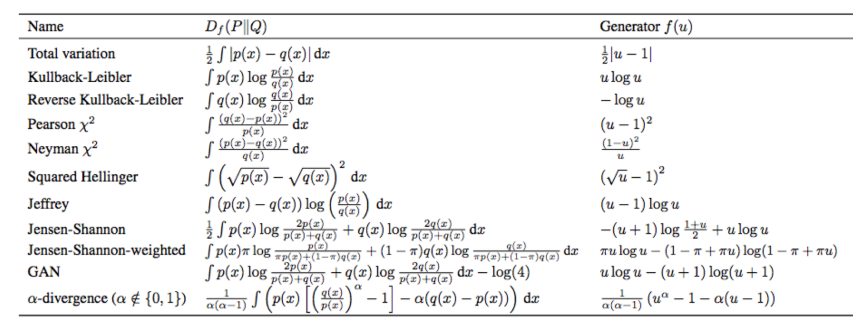

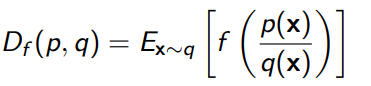

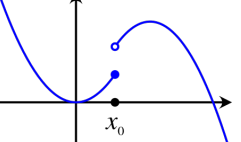

F-divergence: Anything of the form . The is lower-semicontinuous, i.e. points below are continuous. F-divergences are alwayas greater than zero.

KL divergence:

Bayes rule (duh):

Subtract a KL trick: you know that KL is never negative, so if you can add / subtract, you can provide a bound.

Showing minimization, etc: see if you can rearrange into KL divergence or some other f-divergence. To do this, start with the difference (i.e. the optimal should be 0).

Distribution tricks

- marginalize → summation → potentially expectation

- Expand: . See if this gets you anywhere

- Marginalize: .

Expectation

- You can add an expectation out of nothing at all . Look for these to turn functions into expectations

- Can write marginalization process as expectation:

- Expectation is linear, like gradient and integral

- log-probabilities allow you to factor like sums

- Expectation out of summation if there’s a distribution. You can also use a surrogate distribution: .

- Tower property: . More formally, it’s actually , but in most cases this is implied.

Some Basics

Jensen’s inequality

- (when in doubt, remember that expectation is the secant line)

Lower bound computation

- use Jensen’s inequality or subtracting a positive value (for example, KL)

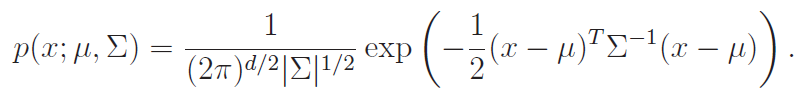

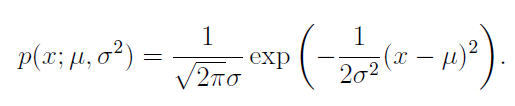

Gaussian formula

Expectation properties

-

Divergences

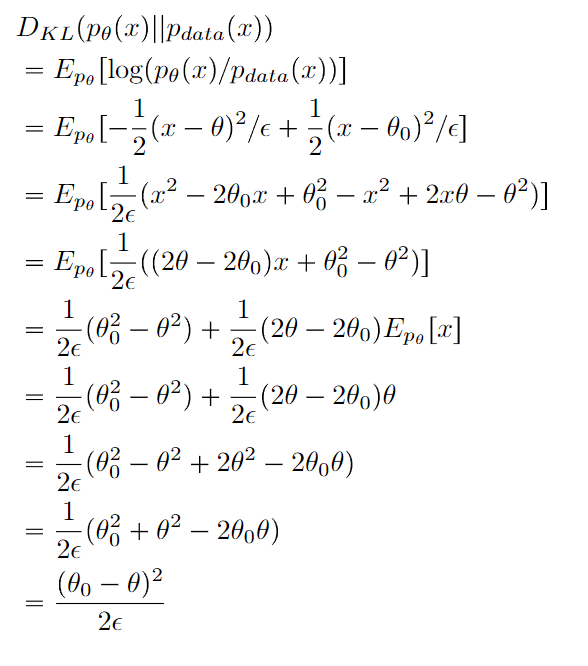

The KL divergence of two same-variance gaussians is simply the scaled square distance of the means

Estimators

An unbiased estimator of some parameter means that (and some convergence stuff surround this assumpation). Just because is an unbiased estimator doesn’t mean that will be an unbiased estimator of . It’s true for linear functions but not generally true for all functions.

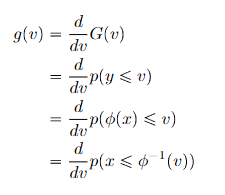

Mapping between distributions / functions

This is a classic problem and it’s very tricky to understand. Here’s the setup: you have some known , some invertible mapping function , and you want to find as a function of .

Non-distribution setup

Without the question of distribution, this is actually really simple.

You take your and you map it into the domain that works for . No biggie.

Distribution-based setup

Why doesn’t this work if is a density function? It has to do with density. Imagine the were rubber bands. if you have to stretch or shrink the rubber band in your mapping of , then you run into the problem of having the same density with different scales, leading to a change in the integral.

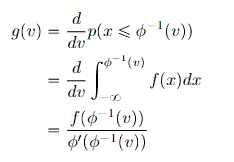

More formally, if , we’ve got a problem that we can solve with standard change of variable techniques. In calculus, we implemented these techniques when we mapped between two domains (u-substitution) but we wanted to keep the integral equivalent.

- Start with

- Find

- Compute in terms of , getting you the final answer

From this, we can derive a general rule (using the derivative of the inverse function general form):

Generalization to vector-distributions

For vector distributions, we are dealing with a multi-variable transformation. This transformation, like the one-dimensional case, must be invertible. For a matrix, it must be full-rank.

Here, instead of worrying about vs , we are worried about mapping onto , where is some unit element. As we’ve learned in multivariate calculus, the convenient notion is the Jacobian determinant, i.e.

Note how the forms are super similar; we just replace the derivative with the determinant of the jacobian. Recall that the jacobian describes how the unit-area stretches or shrinks with the transformation, so this is intuitive. So you’re almost “paying tax” by “unshrinking” from to before you apply the function.

Intuition

Let’s jump directly to the higher dimension.

- we know represents how much the space stretches as we apply the transformation from .

- is the density in X-space. If the Y→X increases, everything is naturally divided by as we go into X-land. Therefore, to reverse this process, we multiply the value by

- If Y→X decreases, the same logic applies, just with a that is less than 1

You can think of a transformation as naturally dividing by the “stretch” factor , and you need to compensate as you map it back.

Worked example

If we have known and we have a good function , then we can compute . Often, we get at the start, which is fine. So, you just scale your values by . If the operation is linear, this is a constant

- rule of thumb: if is a tighter domain, expect to be smaller. If is a looser domain, expect to be larger.

Convexity

Fenchel Conjugate

Any function has a convex conjugate known as the Fenchel conjugate

This is a generalization of Lagrangian duality, but intuitively, this is a convex hull to the function.

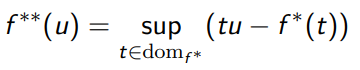

This is convex and lower semi-continuous. We can take more Fenchel conjugates, i.e. you can take the second Fenchel Conjugate

Properties of Fenchel Conjugate

We have . If is convex and lower semi-continuous, .

Proof (definition)

We know that , which means that . Therefore, .

F-divergences

Given two densities, we define a general f-divergence as

where is any convex, lower-semicontinuous function with . Lower-semicontinuous basically means that around , every point that is below must be continuous. Every point above is fine. This looks like the following diagram:

Properties of F-divergences

Always greater than zero

Examples of F-divergences

There are so many types of F-divergences, with a common type being KL divergence, where (careful! It’s not because it’s , not . ) Total Variation is also a common one, where the