Skill Discovery

| Tags | CS 224R |

|---|

Skill Discovery

Soft Q-learning

There’s more theory in the Berkeley version, but essentially we replace the maximizatoin with an with some very nice theoretical background. But more practically, it yields better exploration, finetunability, and robustness.

Diversifying Skills

Condition your policy on some style variable , but you want to be careful. How can you be certain that these skills are diverse?

Soft Q-learning increases action entropy, but this is NOT the same as state entropy. Our objective is different

- High entropy across different ’s, i.e. maximize

- Low entropy once we observe , i.e. minimize

This naturally pushes us towards the information objective . The first term is maximized with a uniform prior. The second term is minimized with sharp distributions, so we propose

as the reward for the policy. The idea here is that we want to maximally separate out the policies.

Using discovered skills

So with this policy that uses , you can learn a policy on top of this policy that only learns how to manipulate . This allows for a policy that deals with a higher level of abstraction, yielding an easier learning experience. But this is actually quite naive. Can we do better?

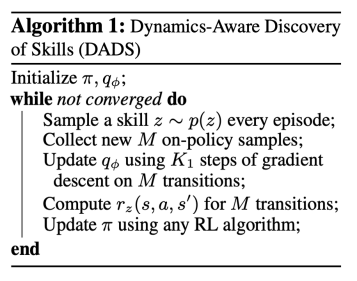

In the previous paradigm, we didn’t know if a skill is useful or not. Instead, we turn to a slightly different objective. Can we condition the MI on the current state?

How does that help? Well, it means that this informs the dynamics of the state the best. Intuitively, this means that every will pick something that is meaningful and consistent.

This yields far more consistent policies. And because we learn a dynamics model, we can now use it in classic control.

Hierarchical RL

Why Hierarchical? Well, that’s how we learn. We start with the simplest techniques, and then we build it up into complicated behaviors.

There is a ton of work in this field, so this will only be a survey

- Have a slower feed a style vector to a larger pi.

- You can ask for data to be relabeled