ALGORITHMS Overview

| Tags | CS 234 |

|---|

Tabular Value Algorithms

These algorithms work without functional approximation, i.e. we assume a finite number of states and actions (it’s called “tabular” because you keep a table of Q and V).

Learning from a Model

These algorithms require an explicit model . There is no issue of exploration, and it’s a problem of effective planning.

Policy iteration: use policy-bellman backups to get , and then create a Q function from the V. Then, take the argmax of this Q to get your policy.

Value Iteration: apply max-bellman backup incrementally to get , which you can then use to derive .

Both have guaranteed, unique convergence, although the proofs are different because the setup is different. Policy iteration has bounded steps but value iteration does not.

Model Free Eval and Control

Under these circumstances, we don’t know , so we introduce the problem of exploration. For the non-model methods below, we use epsilon-greedy.

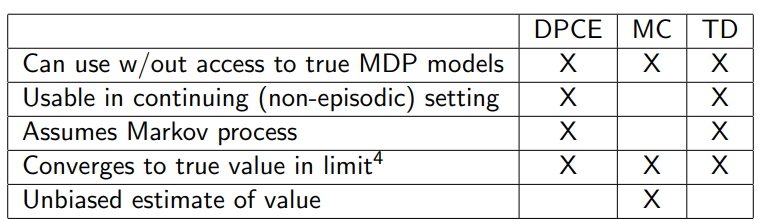

Monte Carlo: roll out in the environment, then use these estimates on a Q function, then run argmax control. For pure eval, you can use a V function. High variance, but unbiased and does not assume markov property.

TD learning (SARSA): Use the insight that , which is good for evaluation. For control, use , which is the SARSA algorithm. This is on-policy.

Q Learning: the same as TD learning, but this time we do . This yields a slightly different Q function algorithm if we are using a non-zero epsilon. Off-policy.

Certainty Equivalence: use interactions to learn models , then run policy iteration / value iteration.

N-step returns: add more terms to the TD learning, which increases variance but decreases bias.

Good convergence guarantees with GLIE. For SARSA and Q Learning, we also need to satisfy the Robbins-Munro sequence rule.

Function Approximation Value Methods

In these methods, we use some parameterized function to approximate the Q or the V. In general, Value methods are best for discrete actions.

We just replace equality with a squared loss. For MC, we have the loss as , for SARSA we have , and for Q learning, we have

DQN: essentially just do Q learning with deep neural networks. Have a replay buffer and a target network for stability. Only for discrete actions.

Double Q Learning: use one network for maximization, one for evaluation.

Double DQN: instead of using the target network to compute , do the argmax over the current network, and then evaluate this state and action on the target network. Similar to Double Q learning.

Policy Search

These algorithms improve the policy directly, which as benefits. You can have a meaningful stochastic policy, and you can also easily deal with continuous action spaces.

Policy Gradient: The objective is , which is the same as . The gradient is with monte carlo estimate. You can remove past rewards due to causality, which decreases variance. This causality corrected version is called REINFORCE. You can add a baseline to subtract from the rewards to reduce the variance.

Actor-Critic Methods: essentially cases where you see that can be approximated by . So you can train an advantage function using function approximation value methods. This function is the critic, and the policy gradient yields the actor.

TRPO: If we stay within a certain distance of the data generation policy, you can guarantee an improvement in . The reasoning is subtle, but concretely, this means that we either run a constrained optimization, a regularized optimization, or just clip the importance samples if they are too large (PPO). Intuitively, PPO prevents some abnormality from sucking all of the numbers to a few small datapoints (which can happen with importance sampling).

Exploration

These are ways that we can best explore the environment, because this is a pretty large issue for RL agents.

Bandits

Bandits are the simplest problem without any state. You just execute actions with a horizon of 1. How do you get to the best action in the fastest amount of time?

Greedy Algorithm: pick the best estimate. Can have linear regret.

Epsilon greedy: greedy but you also try stuff randomly with probability. Annealed epsilon greedy can be sublinear.

UCB: add a number to your estimate that gives you a certain guarantee that the true value is below this bound with probability . Provably sublinear

Thompson sampling: keep a distribution of possible models for the bandits, sample one at every time step, and then use that model to find the arm to pull. Sublinear

MDPs

MBIE-EB: Add a bonus to the reward that corresponds to the frequency of exploration. You may use a pseudocount due to the curse of dimensionality.

Thompson Sampling sample an MDP every step, and create a value function based on it. or, you can sample a Q function directly.

Offline Learning

Here, we are trying to make a meaningful policy using data that is already collected

Behavior cloning: regress to actions in the dataset. This only works if the data is optimal. May not recover from mistakes, and does not do better than behavior policy.

Linear Feature Reward Inverse RL: You’re trying to learn a reward function given the data. You essentially create the most entropic policy given a reward parameterization, and you’re trying to parameterize a reward whose entropic policy yields the highest likelihood of the observed trajectories.

Fitted Q Iteration: Similar idea to SARSA, and we’re trying to get the value of a policy by using the data collected from a different behavior policy. Or, you can use a Q learning approach directly and try to get from the data.