Interior Point Methods

| Tags | Algorithms |

|---|

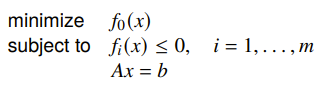

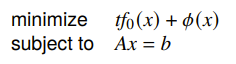

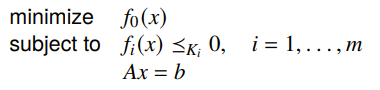

Inequality Constrained Minimization

We have the following setup

where is convex. How do we solve this sort of problem?

Assumptions

We assume that the problem is strictly feasible, which means that Slater’s constraint holds, meaning that there exists some that satisfy the KKT conditions

Log-barrier and Central Path

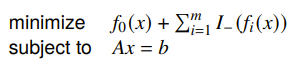

We might start by thinking that we could turn the inequality constraint into the objective by adding an infinity-indicator function

Now, this would technically run, but it would be terrible. The method would just break every time it hits the barrier. But what if we make it smooth?

Log-barrier

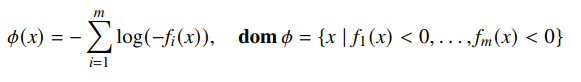

This is where the log-barrier comes in, which is a function that we’ve seen before.

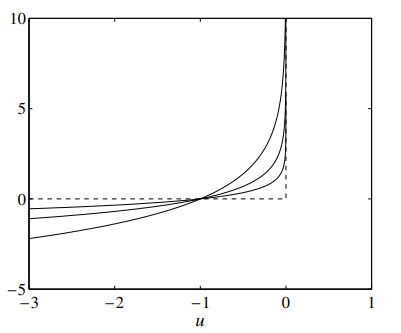

This function is effectively approximating the indicator function but in a smooth way.

This function is convex from composition, and it is also twice continuously differentiable, which is good because we want to apply something like Newton’s method

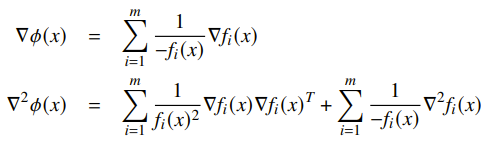

The approximation

We can approximate the indicator function using the log-barrier. It is no longer the same problem, but it has the same properties.

Or, namely: the larger the the closer we are to the original problem, but the sharper the bend. It’s a tradeoff.

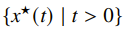

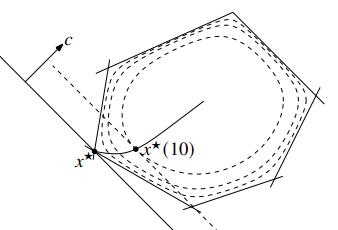

Central path

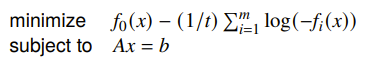

Let be the solution of

If exists and is unique, then the central path is the set

Intuitively, as grows larger, we get closer to the true optimal by edging closer and closer to the edge of the feasible space. Here’s an LP as an example:

Note that here, the path does change with irrelevant constraints because these constraints still change the different forces at play.

Duality Interpretation

There’s an interesting connection to duality. Recall that we set up the dual problem by essentially adding a “soft” penalty through the . Now, let’s talk about duality of this log-barrier problem.

Establishing a lower bound on optimality

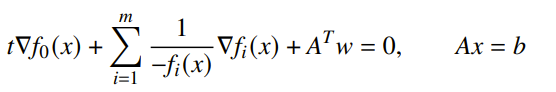

Because of strong duality for every , we can just use the KKT conditions to assert that is optimal if there exists some such that

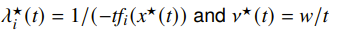

Every central point has a dual feasible point too. Let’s define

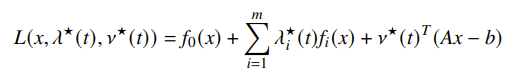

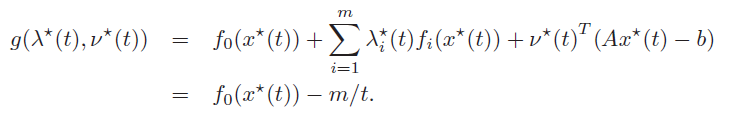

We assert that this choice is a dual feasible point. To prove this, we plug this choice into the Lagrangian

If we simplify and attempt to maximize, we’ll actually get the exact equation for that we got from strong duality. This tells us that minimizes the Lagrangian. This in turn tells us that

which immediately gives us a very nice bound on the optimal value:

Interpretation through KKT

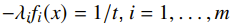

If we let , then we satisfy KKT conditions, except that we have “approximate” complementary slackness

that becomes true slackness when . This interpretation comes from just rearranging our definitions of .

Force field interpretation

You can imagine as the potential of the force field that pushes us to optimize the real objective, and is the force field that pushes you to avoid the boundary. The forces balance at one point, and this balancing shifts with .

Barrier Method

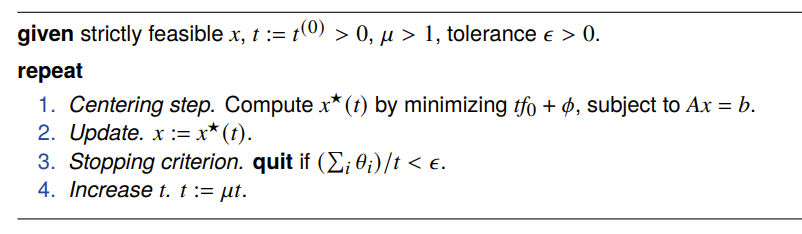

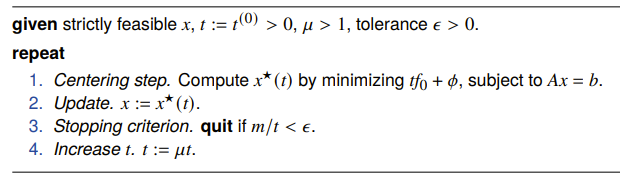

The barrier method takes the insights we made in the previous section and just turns it into an algorithm. You iteratively compute by minimizing with , and then you just keep on doing this until you get close enough.

The stopping criterion is inspired by the duality gap. The centering step might be complicated, so we turn to Phase 1 methods that can get this job done.

Phase 1 Methods

The Barrier method requires a strictly feasible starting point, but what happens if that’s hard to find? Here, we consider the phase 1 methods, which forms an optimization problem that is strictly feasible and finds a strictly feasible point for the original problem. If none exists, then it tells you where the problems are.

Slack variable formulation

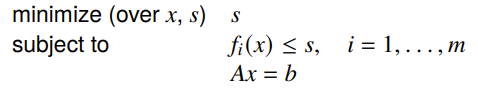

The idea is this: introduce a slack variable for each constraint and minimize

You can pick such that your initial choice of makes this problem feasible. Then, after running everything, as long as all is less than zero, you’ve got a feasible original problem. You can take the you obtained from the problem and use it to initialize the barrier method.

To prove infeasibility, we can use the dual variable. If we find some dual feasible point with a positive dual objective, then we know that and therefore it must be impossible.

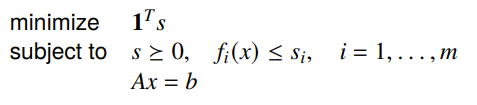

Sum of infeasibilities methods

Instead of finding a single slack variable, you minimize the sum of a slack variable set. This is useful because it can tell you which inequalities are impossible to satisfy.

We can also weigh the different slack variables by the priorities in satisfying the constraints. Generally, this approach can find better solutions.

Complexity of barrier method

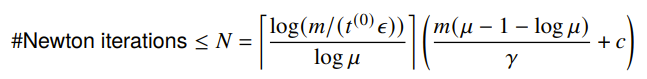

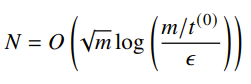

There’s a lot of not-so-fun math, but the final result is that the number of iterations is

As increases, the iterations decreases. If we set , we have

which means that the barrier method is polynomial in , the number of variables.

Generalized inequalities

What if we want to satisfy some other type of inequality, i.e. what if the functions are not scalar valued?

General logarithm

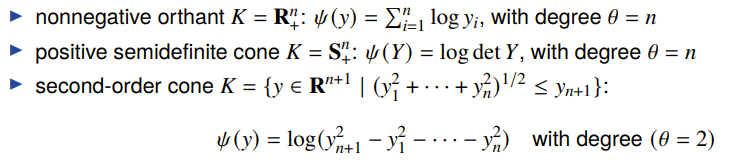

We define as the generalized logarithm for a proper cone if…

- The domain is the interior of

- The hessian of is strictly negative definite

- we have where is the degree of .

There are many formulations of this (unlike the single formulation of the log barrier), including these examples:

Log barrier and central path

You just replace the logarithm with the general logarithm in the barrier function